Uncovering the Power of Density-Based Clustering with DBSCAN

- Introduction to Density-Based Clustering

- Understanding the Key Concepts of DBSCAN

- How DBSCAN Works: A Step-by-Step Explanation

- Advantages of DBSCAN

- Limitations of DBSCAN

- Real-World Use Cases of DBSCAN

- Here are a few best practices to keep in mind when applying DBSCAN:

- Conclusion

- Further Readings and Resources:

Introduction to Density-Based Clustering

Density-based clustering is an unsupervised machine learning technique that identifies dense regions of data points and groups them into clusters. Unlike algorithms such as K-means, which require the number of clusters to be specified in advance, density-based algorithms discover clusters of arbitrary shape and size from the density of data points in the feature space.

One of the most popular and widely used density-based clustering algorithms is DBSCAN (Density-Based Spatial Clustering of Applications with Noise). DBSCAN was proposed by Martin Ester, Hans-Peter Kriegel, Jörg Sander, and Xiaowei Xu in 1996 and has since become a go-to choice for clustering tasks in various domains.

In this blog post, we will dive deep into the concepts behind DBSCAN, understand its working principles, explore its advantages and limitations, and discuss real-world use cases where DBSCAN shines. So, let’s get started!

Understanding the Key Concepts of DBSCAN

To grasp the inner workings of DBSCAN, it’s essential to understand a few key concepts:

- Epsilon (ε): Epsilon is a distance threshold that defines the neighborhood around a data point. It is the radius within which other points are considered neighbors.

- MinPts: MinPts is the minimum number of points that must lie within the epsilon neighborhood of a point, counting the point itself, for it to be considered a core point. It controls how dense a region must be to form a cluster.

- Core Point: A data point is classified as a core point if its epsilon neighborhood contains at least MinPts points, including itself.

- Border Point: A data point is classified as a border point if it is within the epsilon neighborhood of a core point but does not have enough neighbors to be a core point itself.

- Noise Point: A data point is considered a noise point if it is neither a core point nor a border point. Noise points do not belong to any cluster.
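The three point types can be made concrete with a few lines of NumPy. The following is a minimal sketch, assuming Euclidean distance and a brute-force pairwise distance matrix; the function name `classify_points` and the toy coordinates are illustrative and not part of any library.

```python
import numpy as np

def classify_points(X, eps, min_pts):
    """Label each row of X as 'core', 'border', or 'noise'."""
    X = np.asarray(X, dtype=float)
    # Pairwise Euclidean distances (O(n^2) memory, fine for small examples).
    dists = np.linalg.norm(X[:, None, :] - X[None, :, :], axis=-1)
    within_eps = dists <= eps                 # each point is its own neighbor
    is_core = within_eps.sum(axis=1) >= min_pts
    # A border point is a non-core point with at least one core point in range.
    near_core = (within_eps & is_core[None, :]).any(axis=1)
    return np.where(is_core, "core", np.where(near_core, "border", "noise"))

# Hypothetical toy data: a tight group, one point on its edge, one far away.
X = np.array([
    [0.0, 0.0], [0.1, 0.0], [0.0, 0.1], [0.1, 0.1],  # dense group
    [0.35, 0.1],                                      # edge of the group
    [5.0, 5.0],                                       # isolated
])
kinds = classify_points(X, eps=0.3, min_pts=4)
print(kinds)  # ['core' 'core' 'core' 'core' 'border' 'noise']
```

The edge point has only three points in its neighborhood (itself and two members of the group), so it is not a core point, but it lies within epsilon of a core point and therefore counts as a border point.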

With these concepts in mind, let’s explore how DBSCAN works step by step.

How DBSCAN Works: A Step-by-Step Explanation

The DBSCAN algorithm follows a simple yet effective approach to identify clusters based on density. Here’s a step-by-step explanation of how DBSCAN works:

1. Select an arbitrary unvisited point from the dataset and mark it as visited.

2. Retrieve all points that are within the epsilon distance of the selected point (its epsilon neighborhood).

3. If the epsilon neighborhood contains at least MinPts points, the selected point is marked as a core point, and a new cluster is started. All points within the epsilon neighborhood are added to the cluster.

4. Iteratively expand the cluster by examining each point that was added. If that point is itself a core point, add every point in its epsilon neighborhood that is not yet assigned to a cluster. Points that are not core points join the cluster as border points but are not expanded further. Repeat this process until no more points can be added to the cluster.

5. If the selected point is not a core point, provisionally mark it as a noise point. It may later be reassigned as a border point if it turns out to lie within the epsilon neighborhood of a core point.

6. Move to the next unvisited point in the dataset and repeat steps 2-5 until all points have been visited.

By following these steps, DBSCAN identifies dense regions of points and groups them into clusters while effectively handling noise points that do not belong to any cluster.
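The steps above can be sketched in plain Python with NumPy. This is an illustrative implementation under stated assumptions (Euclidean distance, brute-force neighborhood queries, and the hypothetical names `dbscan`, `NOISE`, and `UNVISITED`); it is not how scikit-learn or any other library implements the algorithm, and it is meant for reading rather than for large datasets.

```python
from collections import deque

import numpy as np

NOISE = -1       # final label for points that belong to no cluster
UNVISITED = -2   # internal marker, never returned

def dbscan(X, eps, min_pts):
    """Return one cluster label per row of X; -1 marks noise."""
    X = np.asarray(X, dtype=float)
    labels = np.full(len(X), UNVISITED)

    def region_query(i):
        # Brute-force epsilon neighborhood of point i (includes i itself).
        return np.flatnonzero(np.linalg.norm(X - X[i], axis=1) <= eps)

    cluster_id = -1
    for i in range(len(X)):
        if labels[i] != UNVISITED:
            continue
        neighbors = region_query(i)
        if len(neighbors) < min_pts:
            labels[i] = NOISE            # provisional: may become a border point
            continue
        cluster_id += 1                  # i is a core point: start a new cluster
        labels[i] = cluster_id
        queue = deque(neighbors)
        while queue:
            j = queue.popleft()
            if labels[j] == NOISE:
                labels[j] = cluster_id   # earlier "noise" is really a border point
            if labels[j] != UNVISITED:
                continue
            labels[j] = cluster_id
            j_neighbors = region_query(j)
            if len(j_neighbors) >= min_pts:   # only core points expand the cluster
                queue.extend(j_neighbors)
    return labels

# Hypothetical toy data: a tight group, one point on its edge, one far away.
X = np.array([[0.0, 0.0], [0.1, 0.0], [0.0, 0.1], [0.1, 0.1],
              [0.35, 0.1], [5.0, 5.0]])
labels = dbscan(X, eps=0.3, min_pts=4)
print(labels)  # [ 0  0  0  0  0 -1]
```

Using a queue instead of recursion keeps the expansion step iterative, and the provisional noise label shows why the order in which points are visited can change which cluster a border point ends up in, while core points and noise points are unaffected.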

Advantages of DBSCAN

DBSCAN offers several advantages over other clustering algorithms:

- Automatically Discovering Clusters: DBSCAN does not require specifying the number of clusters in advance. It automatically discovers clusters based on the density of points in the feature space.

- Handling Arbitrary Shaped Clusters: DBSCAN can identify clusters of arbitrary shape and size, making it suitable for datasets with non-spherical or irregularly shaped clusters.

- Robustness to Noise: DBSCAN is robust to noise and outliers. It effectively handles noise points by classifying them as such and excluding them from the clusters.

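- Scalability: When neighborhood queries are supported by a spatial index such as a k-d tree or an R*-tree, DBSCAN runs in O(n log n) time on average for low-dimensional data. Without an index, or when the index becomes ineffective in high-dimensional data, the worst case is O(n^2). This makes it practical for moderately large, low-dimensional datasets.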
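To illustrate the point about arbitrary shapes, the sketch below compares K-means and DBSCAN on scikit-learn's synthetic two-moons dataset. The dataset, the `eps=0.2` and `min_samples=5` settings, and the use of the adjusted Rand index as the comparison metric are choices made for this illustration, not recommendations for real data.

```python
from sklearn.cluster import DBSCAN, KMeans
from sklearn.datasets import make_moons
from sklearn.metrics import adjusted_rand_score

# Two interleaved half-circles: a classic non-spherical cluster shape.
X, y_true = make_moons(n_samples=300, noise=0.05, random_state=0)

# K-means must be told the number of clusters and assumes roughly convex groups.
kmeans_labels = KMeans(n_clusters=2, n_init=10, random_state=0).fit_predict(X)

# DBSCAN is only given a neighborhood radius and a density threshold.
dbscan_labels = DBSCAN(eps=0.2, min_samples=5).fit_predict(X)

n_dbscan_clusters = len(set(dbscan_labels) - {-1})   # -1 is the noise label
kmeans_ari = adjusted_rand_score(y_true, kmeans_labels)
dbscan_ari = adjusted_rand_score(y_true, dbscan_labels)

print(f"DBSCAN found {n_dbscan_clusters} clusters")
print(f"Adjusted Rand index, K-means: {kmeans_ari:.2f}")
print(f"Adjusted Rand index, DBSCAN:  {dbscan_ari:.2f}")
```

On data like this, K-means tends to cut each moon in half with a straight boundary, whereas DBSCAN follows the curved dense regions.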

Limitations of DBSCAN

While DBSCAN is a powerful clustering algorithm, it has a few limitations to consider:

- Sensitivity to Parameters: DBSCAN is sensitive to the choice of epsilon and MinPts parameters. Different parameter values can lead to different clustering results, and finding the optimal values may require domain knowledge or parameter tuning.

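- Difficulty with Varying Density Clusters: DBSCAN may struggle with datasets that have clusters of varying densities, because a single epsilon and MinPts pair applies to the whole dataset. Values tuned for dense clusters can label sparse clusters as noise, while values tuned for sparse clusters can merge dense clusters that lie close together.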

- Curse of Dimensionality: DBSCAN’s performance can degrade in high-dimensional spaces due to the curse of dimensionality. As the number of dimensions increases, the notion of density becomes less meaningful, and the algorithm may struggle to identify meaningful clusters.
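The varying-density limitation is easy to reproduce on synthetic data. The sketch below builds one tight and one diffuse Gaussian blob (the sizes, centers, and spreads are arbitrary assumptions for the demonstration) and reports how much of each blob DBSCAN labels as noise for two different epsilon values.

```python
import numpy as np
from sklearn.cluster import DBSCAN

rng = np.random.default_rng(0)
# Hypothetical data: 200 tightly packed points and 200 widely spread points.
dense = rng.normal(loc=(0.0, 0.0), scale=0.3, size=(200, 2))
sparse = rng.normal(loc=(10.0, 10.0), scale=2.5, size=(200, 2))
X = np.vstack([dense, sparse])

def noise_fractions(eps, min_samples=5):
    """Fraction of each blob labeled as noise (-1) for the given eps."""
    labels = DBSCAN(eps=eps, min_samples=min_samples).fit_predict(X)
    return np.mean(labels[:200] == -1), np.mean(labels[200:] == -1)

for eps in (0.3, 2.0):
    dense_noise, sparse_noise = noise_fractions(eps)
    print(f"eps={eps}: dense blob noise={dense_noise:.0%}, "
          f"sparse blob noise={sparse_noise:.0%}")
```

With the small epsilon, most of the sparse blob should be reported as noise even though it is a genuine group; a larger epsilon recovers more of it, but only by also coarsening what counts as dense everywhere else. Variants such as OPTICS and HDBSCAN were designed with this situation in mind.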

Real-World Use Cases of DBSCAN

DBSCAN finds applications in various domains where identifying dense regions of data points is crucial. Let’s explore a few real-world use cases:

- Anomaly Detection: DBSCAN can be used for anomaly detection by identifying data points that do not belong to any cluster. These points can be considered anomalies or outliers. For example, in fraud detection, DBSCAN can identify unusual transactions that deviate from the normal behavior.

- Image Segmentation: DBSCAN can be applied to image segmentation tasks, where the goal is to partition an image into multiple segments based on pixel similarity. By treating pixels as data points in a feature space, DBSCAN can identify regions of similar pixels and group them into segments.

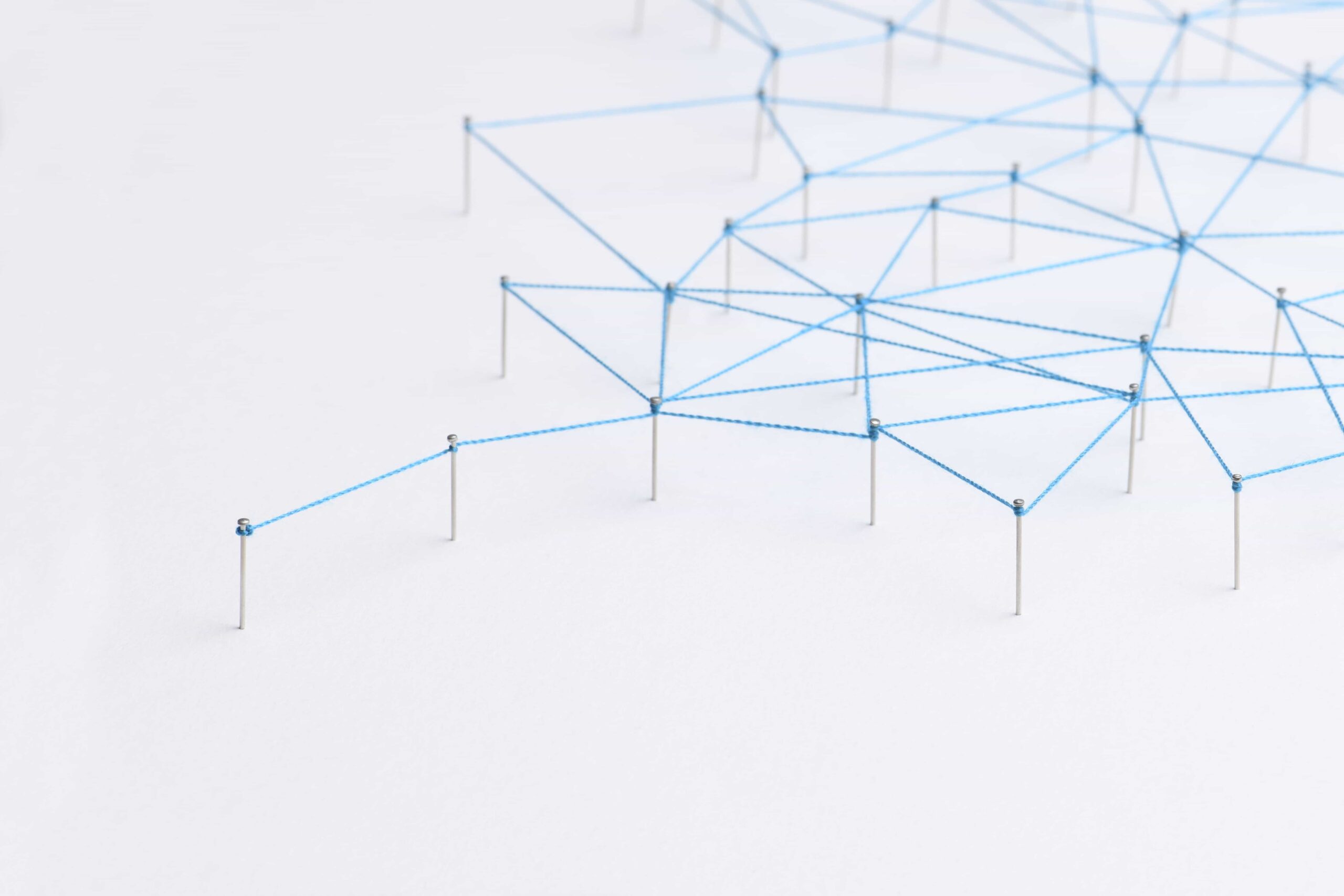

- Geographic Data Analysis: DBSCAN is well-suited for analyzing geographic data and identifying clusters of points based on their spatial proximity. It can be used to discover regions with high population density, identify hotspots of crime or disease outbreaks, or segment customers based on their geographic location.

- Social Network Analysis: DBSCAN can be used to identify communities or clusters within social networks based on the density of connections between individuals. It can help in understanding the structure and dynamics of social networks and identifying influential groups or individuals.
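For the geographic use case, scikit-learn's DBSCAN can work directly on latitude and longitude by using the haversine metric, which expects coordinates in radians and returns distances on the unit sphere. The sketch below is a small illustration under stated assumptions: the coordinates are synthetic points scattered around two cities plus one isolated point, and the 5 km radius is an arbitrary choice.

```python
import numpy as np
from sklearn.cluster import DBSCAN

EARTH_RADIUS_KM = 6371.0088  # mean Earth radius, used to convert km to radians

rng = np.random.default_rng(42)
# Synthetic (latitude, longitude) pairs in degrees.
berlin = rng.normal(loc=(52.52, 13.40), scale=0.01, size=(30, 2))
paris = rng.normal(loc=(48.86, 2.35), scale=0.01, size=(30, 2))
madrid = np.array([[40.42, -3.70]])          # a single isolated point
coords_deg = np.vstack([berlin, paris, madrid])

eps_km = 5.0
db = DBSCAN(
    eps=eps_km / EARTH_RADIUS_KM,   # haversine distances are in radians
    min_samples=5,
    metric="haversine",
    algorithm="ball_tree",
)
labels = db.fit_predict(np.radians(coords_deg))

n_clusters = len(set(labels) - {-1})
print(f"Clusters found: {n_clusters}")
print(f"Label of the isolated point: {labels[-1]}")
```

Dividing the radius in kilometers by the Earth's radius is what makes `eps` comparable to haversine distances; passing degrees or kilometers directly would silently produce meaningless neighborhoods.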

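As a worked example, consider a retail business that wants to segment its customers. A minimal version with scikit-learn might look like this:

```python
from sklearn.cluster import DBSCAN
from sklearn.datasets import make_blobs
from sklearn.preprocessing import StandardScaler

# X is the feature matrix representing customer data (one row per customer).
# The synthetic data below is only a stand-in so the snippet runs;
# replace it with your own NumPy array of customer features.
X, _ = make_blobs(n_samples=300, centers=3, random_state=0)

# Standardize the features so each contributes comparably to distances
scaler = StandardScaler()
X_scaled = scaler.fit_transform(X)

# Apply DBSCAN with epsilon=0.5 and MinPts=5
dbscan = DBSCAN(eps=0.5, min_samples=5)
labels = dbscan.fit_predict(X_scaled)

# Analyze the resulting clusters (label -1 is reserved for noise)
n_clusters = len(set(labels)) - (1 if -1 in labels else 0)
print(f"Number of clusters: {n_clusters}")
for i in range(n_clusters):
    cluster_points = X[labels == i]
    print(f"Cluster {i}: {len(cluster_points)} points")

# Identify noise points
noise_points = X[labels == -1]
print(f"Noise points: {len(noise_points)}")
```

By analyzing the resulting clusters, the retail business can gain insights into different customer segments and tailor their marketing strategies, product recommendations, and personalized offers accordingly.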

Here are a few best practices to keep in mind when applying DBSCAN:

- Normalize or standardize the features to ensure that the distance metric used by DBSCAN is meaningful.

- Experiment with different values of epsilon and MinPts to find the optimal parameter settings for your specific dataset. Use domain knowledge and visualization techniques to guide the parameter selection process.

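- Evaluate the quality of the resulting clusters using appropriate metrics such as silhouette score or Davies-Bouldin index, and decide explicitly how to treat noise points (for example, by excluding them) before computing a score. Keep in mind that both metrics favor compact, convex clusters and can understate the quality of irregularly shaped ones. Compare the results with other clustering algorithms to assess the suitability of DBSCAN for your task.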

- Consider the scalability of DBSCAN for large datasets. While DBSCAN has a relatively low time complexity, it may still be computationally expensive for massive datasets. In such cases, consider using approximate or parallel implementations of DBSCAN.

- Analyze the noise points identified by DBSCAN carefully. They may represent outliers or anomalies that require further investigation or special handling.
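One common heuristic for choosing epsilon is the k-distance curve: compute each point's distance to its k-th nearest neighbor, sort those distances, and look for the "elbow" where they start to rise sharply. The sketch below assumes synthetic blob data and sets k equal to `min_samples`; it prints a few percentiles instead of plotting, and reading an epsilon off the curve remains a judgment call rather than an exact rule.

```python
import numpy as np
from sklearn.datasets import make_blobs
from sklearn.neighbors import NearestNeighbors
from sklearn.preprocessing import StandardScaler

# Synthetic stand-in data; replace with your own feature matrix.
X, _ = make_blobs(n_samples=300, centers=3, cluster_std=0.6, random_state=0)
X_scaled = StandardScaler().fit_transform(X)

min_samples = 5
# Querying the fitted data returns each point as its own nearest neighbor,
# which matches scikit-learn's convention that min_samples counts the point itself.
nn = NearestNeighbors(n_neighbors=min_samples).fit(X_scaled)
distances, _ = nn.kneighbors(X_scaled)

# Distance from every point to the farthest of its min_samples neighbors, sorted.
k_distances = np.sort(distances[:, -1])

for q in (50, 90, 95, 99):
    print(f"{q}th percentile of k-distance: {np.percentile(k_distances, q):.3f}")
```

Plotting `k_distances` against its index usually makes the elbow easier to see than percentiles do; points to the right of the elbow are the ones most likely to end up as noise for an epsilon chosen near it.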

Conclusion

In conclusion, DBSCAN is a powerful density-based clustering algorithm that offers a flexible and intuitive approach to discovering clusters in datasets. By understanding its concepts, advantages, limitations, and real-world applications, data scientists and machine learning practitioners can leverage DBSCAN effectively to gain insights from their data and solve complex clustering problems.

Remember, clustering is an exploratory data analysis technique, and the results should be interpreted in the context of the domain and the specific problem at hand. It’s always a good practice to validate the clustering results with domain experts and incorporate their feedback to refine the clustering process.

We hope this deep dive into DBSCAN has provided you with a comprehensive understanding of density-based clustering and its potential applications. Happy clustering!

Further Readings and Resources:

- GDBSCAN paper, a generalization of DBSCAN by Sander, Ester, Kriegel, and Xu (1998): Density-Based Clustering in Spatial Databases: The Algorithm GDBSCAN and Its Applications

- Scikit-learn documentation on DBSCAN: DBSCAN Clustering

- Tutorial on DBSCAN with Python: DBSCAN Clustering in Python