Single Shot vs Multi Shot: A Comprehensive Guide to LLM Evaluation Techniques

- Introduction

- Understanding LLM Evaluation

- Single Shot Evaluation: In-Depth Analysis

- Multi Shot Evaluation: A Deeper Dive

- Comparative Analysis: Single Shot vs Multi Shot

- Advanced Evaluation Techniques

- Ethical Considerations in LLM Evaluation

- Best Practices for LLM Evaluation

- The Future of LLM Evaluation

- Conclusion

Introduction

Large Language Models (LLMs) have become a cornerstone of modern natural language processing, powering applications from chatbots to content generation systems. As these models grow in complexity and capability, the challenge of accurately evaluating their performance becomes increasingly crucial. Two primary evaluation paradigms have emerged: “single shot” and “multi shot” techniques. In this comprehensive guide, we’ll delve deep into these methods, exploring their nuances, applications, and implications for the future of AI assessment.

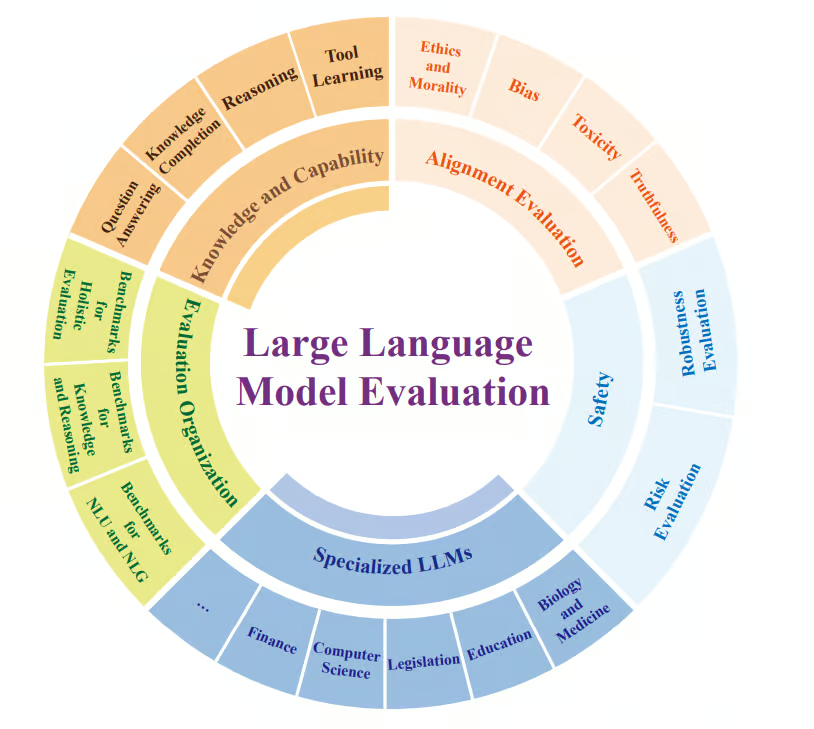

Understanding LLM Evaluation

Before we dive into specific techniques, it’s important to understand the broader context of LLM evaluation. Evaluation serves several critical purposes:

- Benchmarking: Comparing different models or versions of the same model.

- Capability Assessment: Understanding what tasks a model can and cannot perform.

- Improvement Guidance: Identifying areas where models need refinement.

- Ethical Considerations: Uncovering potential biases or harmful outputs.

- Deployment Readiness: Determining if a model is suitable for real-world applications.

With these goals in mind, let’s explore the two main evaluation paradigms.

Image Source : https://arxiv.org/pdf/2310.19736

Single Shot Evaluation: In-Depth Analysis

Definition and Methodology

Single shot evaluation, also known as zero-shot evaluation, involves testing an LLM’s performance on a task without providing any examples or additional context. The model is given a prompt or question and must generate a response based solely on its pre-trained knowledge.

Example: Prompt: “Translate the following English sentence to French: ‘The cat is on the table.'” Expected Output: “Le chat est sur la table.”

Advantages of Single Shot Evaluation

- Simplicity: Easy to implement and requires minimal setup, making it ideal for quick assessments.

- Tests Raw Knowledge: Assesses the model’s inherent understanding without additional priming, revealing its true capabilities.

- Mimics Real-World Use: Often reflects how models are used in production environments where example-based training isn’t feasible.

- Generalization Assessment: Helps evaluate how well the model generalizes to unseen tasks.

- Efficiency: Requires less computational resources and time compared to multi shot evaluations.

Disadvantages of Single Shot Evaluation

- High Variance: Performance can vary significantly based on prompt wording, leading to inconsistent results.

- Limited Task Understanding: The model may misinterpret the task without examples, especially for complex or ambiguous prompts.

- Underestimates Potential: May not fully showcase the model’s capabilities with proper context or fine-tuning.

- Sensitivity to Phrasing: Small changes in prompt wording can lead to drastically different outputs.

- Difficulty with Specialized Tasks: Struggles to evaluate performance on domain-specific or highly technical tasks.

Use Cases for Single Shot Evaluation

- General Knowledge Assessment: Testing the model’s broad understanding across various topics.

- Language Translation: Evaluating basic translation capabilities without context.

- Sentiment Analysis: Assessing the model’s ability to determine sentiment from standalone statements.

- Question Answering: Testing factual recall and inference on straightforward questions.

- Text Summarization: Evaluating the model’s ability to condense information without examples.

Multi Shot Evaluation: A Deeper Dive

Definition and Methodology

Multi shot evaluation, also called few-shot evaluation, provides the LLM with one or more examples of the desired task before asking it to perform. This can range from one example (one-shot) to several (few-shot).

Example (Two-Shot): Prompt: “Translate the following sentences from English to Spanish:

Input: ‘Hello, how are you?’ Output: ‘¿Hola, cómo estás?’

Input: ‘I like to eat pizza.’ Output: ‘Me gusta comer pizza.’

Now translate: ‘The weather is nice today.'”

Expected Output: “El clima está agradable hoy.”

Advantages of Multi Shot Evaluation

- Improved Performance: Models often perform better with task-specific examples, leading to more accurate evaluations.

- Clear Task Definition: Examples help clarify the expected output format and style, reducing ambiguity.

- Flexibility: Allows testing of more complex or nuanced tasks that may be difficult to explain without examples.

- Context Simulation: Can better mimic real-world scenarios where some context or examples are available.

- Fine-Tuning Assessment: Helps evaluate how well models can adapt to specific tasks with minimal additional training.

Disadvantages of Multi Shot Evaluation

- Potential for Overfitting: Models may rely too heavily on the given examples, leading to poor generalization.

- Increased Complexity: Requires careful selection and curation of examples to avoid biasing the evaluation.

- Less Representative of Zero-Shot Abilities: May not reflect real-world usage where examples aren’t available.

- Time and Resource Intensive: Preparing and processing multiple examples increases evaluation overhead.

- Example Dependency: Results can be highly dependent on the quality and relevance of chosen examples.

Use Cases for Multi Shot Evaluation

- Complex Task Assessment: Evaluating performance on tasks that require specific formatting or style.

- Domain Adaptation: Testing how well models can adapt to specialized domains with minimal context.

- Creativity and Generation: Assessing the model’s ability to continue patterns or styles from given examples.

- Reasoning and Inference: Evaluating more complex logical or analytical capabilities.

- Dialogue Systems: Testing conversational abilities by providing example interactions.

Comparative Analysis: Single Shot vs Multi Shot

Performance Metrics

When comparing single shot and multi shot evaluations, several metrics are commonly used:

- Accuracy: The percentage of correct responses.

- Perplexity: A measure of how well the model predicts a sample (lower is better).

- BLEU Score: For translation tasks, measuring the quality of machine-translated text.

- F1 Score: Balancing precision and recall, especially useful for classification tasks.

- Human Evaluation Scores: Subjective ratings by human judges on various aspects of model output.

Research has shown that multi shot evaluations generally lead to higher performance across these metrics, but the magnitude of improvement varies based on the task and model.

Task Complexity Considerations

The choice between single shot and multi shot evaluation often depends on task complexity:

- Simple Tasks: For basic operations like simple math or straightforward translations, single shot evaluation may be sufficient and more efficient.

- Complex Tasks: Tasks involving reasoning, creativity, or specialized knowledge often benefit from multi shot evaluation to clarify expectations and context.

Model Capability Factors

Different models may perform differently under single shot vs multi shot conditions:

- Advanced Models: State-of-the-art LLMs like GPT-4 or PaLM may perform well in single shot scenarios due to their vast knowledge and strong generalization abilities.

- Specialized Models: Domain-specific models might benefit more from multi shot evaluation, especially when assessing performance outside their primary training domain.

Real-world Application Alignment

Choosing between single shot and multi shot evaluation should consider the intended real-world application:

- If the model will be used in a context where examples are readily available (e.g., a customer service chatbot with access to previous interactions), multi shot evaluation may be more representative.

- For applications requiring on-the-fly responses to novel queries (e.g., open-ended question answering), single shot evaluation might better reflect actual use.

Advanced Evaluation Techniques

Beyond basic single shot and multi shot paradigms, several advanced techniques have emerged:

Chain-of-Thought Prompting

This technique involves prompting the model to explain its reasoning step-by-step, which can be applied in both single shot and multi shot contexts. It’s particularly useful for assessing the model’s problem-solving abilities and identifying potential logical flaws.

Dynamic Few-Shot Learning

Instead of using a fixed set of examples, this approach dynamically selects the most relevant examples from a larger pool based on the input query. This can lead to more accurate and context-appropriate evaluations.

Adversarial Testing

Creating intentionally challenging or edge-case prompts to stress-test the model’s capabilities and identify potential weaknesses or biases.

Cross-Task Generalization

Evaluating how well skills learned in one domain transfer to related but distinct tasks, which can be particularly insightful when comparing single shot and multi shot performance.

Ethical Considerations in LLM Evaluation

Regardless of the evaluation technique chosen, ethical considerations should be at the forefront:

- Bias Detection: Both single shot and multi shot evaluations should include tests for various forms of bias (gender, racial, cultural, etc.).

- Fairness: Ensure that evaluation datasets and examples represent diverse perspectives and experiences.

- Safety: Include prompts that test the model’s ability to handle potentially harmful or inappropriate requests safely.

- Transparency: Clearly document all aspects of the evaluation process, including example selection criteria for multi shot tests.

Best Practices for LLM Evaluation

To ensure robust and meaningful evaluations, consider these best practices:

- Diverse Test Sets: Use a wide range of tasks and prompts to get a comprehensive view of model performance.

- Consistent Metrics: Employ standardized evaluation metrics to enable meaningful comparisons across models and evaluation techniques.

- Human Evaluation: Incorporate human judgment, especially for tasks involving creativity, nuance, or ethical considerations.

- Iterative Testing: Regularly reassess models as they are fine-tuned or as new versions are released.

- Contextual Reporting: Always report results in the context of the evaluation method used (single shot vs multi shot).

- Error Analysis: Go beyond aggregate metrics to analyze specific failure cases and patterns.

- Reproducibility: Ensure that evaluation procedures and datasets are well-documented and, ideally, publicly available for verification.

The Future of LLM Evaluation

As LLM technology continues to advance, evaluation techniques are likely to evolve as well. Some potential future directions include:

- Hybrid Evaluation Frameworks: Combining aspects of both single shot and multi shot paradigms to provide more comprehensive assessments.

- Automated Evaluation Generation: Using AI to generate diverse and challenging evaluation tasks dynamically.

- Continuous Learning Assessment: Evaluating models’ ability to learn and adapt over time, rather than just assessing static performance.

- Multimodal Evaluation: As models incorporate multiple modalities (text, image, audio), evaluation techniques will need to adapt to assess these integrated capabilities.

- Ethical AI Alignment: Increased focus on evaluating models’ alignment with human values and ethical principles.

Conclusion

The choice between single shot and multi shot evaluation techniques is not a matter of one being universally superior to the other. Rather, it’s about selecting the most appropriate method for the specific context, task, and goals of the evaluation. By understanding the nuances of each approach, researchers and practitioners can design more effective evaluation protocols, leading to more accurate assessments of LLM capabilities and ultimately to the development of more robust and reliable AI systems.

As the field of AI continues to evolve at a rapid pace, so too must our evaluation methodologies. By combining rigorous testing, ethical consideration, and a nuanced understanding of different evaluation paradigms, we can ensure that our assessments of LLMs remain meaningful, accurate, and aligned with the ultimate goal of creating AI systems that are both powerful and beneficial to humanity.