Linear Regression: Simplifying Data Predictions

- Introduction to Linear Regression

- The Concept of Regression Analysis

- Types of Regression Models

- Simple Linear Regression vs. Multiple Linear Regression

- Assumptions of Linear Regression

- Training a Linear Regression Model

- Evaluating Model Performance

- Making Predictions

- Real-World Applications

- Conclusion

Introduction to Linear Regression

Linear regression is one of the most widely used machine learning algorithms. It is a statistical method for predicting continuous numeric values from historical data. Regression analysis involves modelling the relationship between a dependent variable (also called the target or response variable) and one or more independent variables (also called predictors or features).

The Concept of Regression Analysis

Regression analysis originated with Francis Galton’s work in the late 1800s studying the heights of parents and their children. Galton observed that extreme characteristics of parents were not completely passed on to offspring, but rather the characteristics of children regressed towards a mediocre value. This phenomenon came to be known as “regression toward the mean” and gave rise to the term “regression analysis” (reference 1).

In linear regression, the model assumes a linear relationship between the input variables (x) and the single output variable (y). The linear equation assigns one scale factor to each input value or column, called a coefficient and conventionally written with the lowercase Greek letter beta (β). Each coefficient gives the expected change in the response for a one-unit change in its predictor, with the other predictors held fixed. Its magnitude depends on the predictor's units, so it does not by itself measure the strength of the correlation. These coefficients are estimated from the data during model training.
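Written out, the linear relationship described above takes the following general form. The symbols for the intercept ($\beta_0$), the error term ($\varepsilon$), and the number of predictors ($p$) are conventional notation assumed here, not taken from the text.

```latex
y = \beta_0 + \beta_1 x_1 + \beta_2 x_2 + \dots + \beta_p x_p + \varepsilon
```

With a single predictor this reduces to a straight line, where $\beta_1$ is the slope and $\beta_0$ is the intercept.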

Types of Regression Models

There are various types of regression models in machine learning:

- Simple Linear Regression: Used when there is a single predictor variable and a single response variable. It establishes a linear relationship between the two using a straight line (y = mx + b).

- Multiple Linear Regression: Used when there are multiple predictor variables influencing a single response variable. It finds a linear relationship between the response and a subset or all of the available predictors.

- Polynomial Regression: Used when the relationship between independent and dependent variables appears curved or nonlinear. Polynomial terms are added to linear regression to account for nonlinearity.

- Ridge Regression: Used to address multicollinearity (high correlations among predictor variables), which makes coefficient estimates unstable and can contribute to overfitting. It adds an L2 regularization term (a penalty on the squared size of the coefficients) to shrink them and reduce model complexity.

- Lasso Regression: Similar to ridge regression, but it uses an L1 regularization penalty, which can shrink some coefficients to exactly zero. This effectively performs feature selection and can improve both the prediction accuracy and the interpretability of the model.

- Elastic Net Regression: Combines the L1 penalty of lasso with the L2 penalty of ridge regression. This allows for learning a sparse model in which few of the weights are non-zero, like lasso, while still maintaining the regularization properties of ridge.
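To make the difference between the L2 (ridge) and L1 (lasso) penalties concrete, here is a minimal pure-Python sketch for a single centered predictor with no intercept. The data values, the `slopes` helper, and the penalty strengths are made up for illustration, and the closed-form expressions apply only to this one-predictor case under the objectives stated in the docstring.

```python
# Toy one-predictor example (made-up data) comparing ordinary least
# squares, ridge (L2), and lasso (L1) slope estimates.

def center(values):
    """Subtract the mean so that no intercept is needed."""
    mean = sum(values) / len(values)
    return [v - mean for v in values]

def slopes(x, y, lam):
    """Return (ols, ridge, lasso) slopes for penalty strength lam.

    Objectives, for centered data and slope b:
      ridge: sum((y - b*x)^2) + lam * b^2
      lasso: sum((y - b*x)^2) + lam * |b|
    """
    sxx = sum(xi * xi for xi in x)
    sxy = sum(xi * yi for xi, yi in zip(x, y))
    ols = sxy / sxx
    # Ridge shrinks the slope toward zero but keeps it non-zero when sxy != 0.
    ridge = sxy / (sxx + lam)
    # Lasso soft-thresholds: a large enough penalty sets the slope to exactly zero.
    shrunk = max(abs(sxy) - lam / 2, 0)
    lasso = (1 if sxy >= 0 else -1) * shrunk / sxx
    return ols, ridge, lasso

x = center([1.0, 2.0, 3.0, 4.0, 5.0])
y = center([2.1, 3.9, 6.2, 7.8, 10.0])

for lam in (0.0, 10.0, 100.0):
    ols, ridge, lasso = slopes(x, y, lam)
    print(f"lam={lam:6.1f}  ols={ols:.3f}  ridge={ridge:.3f}  lasso={lasso:.3f}")
```

With the largest penalty in this toy data, the ridge slope is small but positive while the lasso slope is exactly zero, which is the sparsity property mentioned above.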

Simple Linear Regression vs. Multiple Linear Regression

Simple linear regression contains one independent variable and one dependent variable. It estimates how the value of the dependent variable changes as the single independent variable is varied. For example, predicting salary based on years of experience.

Multiple linear regression contains two or more independent variables and a single dependent variable. It estimates how the value of the dependent variable changes when any one of the independent variables is varied while the other independent variables are held fixed. For example, predicting salary based on years of experience, level of education, location, and job role.
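The contrast between the two can be sketched with NumPy's least-squares solver. The salary figures, the `education` encoding, and the `fit_least_squares` helper below are hypothetical, and the data follow an exact linear rule so the results are easy to check.

```python
import numpy as np

# Hypothetical, made-up data: salary in thousands.
years = np.array([1.0, 3.0, 5.0, 7.0, 9.0, 11.0])
education = np.array([0.0, 1.0, 0.0, 2.0, 1.0, 2.0])  # assumed numeric degree level
salary = 30.0 + 4.0 * years + 6.0 * education          # exact linear rule, no noise

def fit_least_squares(features, target):
    """Ordinary least squares; returns [intercept, coef_1, ...]."""
    # Prepend a column of ones so the first coefficient is the intercept.
    X = np.column_stack([np.ones(len(target)), features])
    coefs, *_ = np.linalg.lstsq(X, target, rcond=None)
    return coefs

# Simple linear regression: one predictor.
simple = fit_least_squares(years, salary)

# Multiple linear regression: two predictors.
multiple = fit_least_squares(np.column_stack([years, education]), salary)

print("simple   [intercept, years]:", simple)
print("multiple [intercept, years, education]:", multiple)
```

In this toy data the multiple regression recovers the rule used to generate the salaries, while the simple regression's slope for years differs because it also absorbs part of the effect of the omitted education variable.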

Assumptions of Linear Regression

There are several assumptions that need to be met in order for linear regression models to work reliably:

- Linear relationship – The dependent variable should be a linear, additive function of the independent variables.

- Normality of errors – The error terms (residuals) should be approximately normally distributed. This assumption is sometimes labeled multivariate normality.

- Minimal multicollinearity – Multicollinearity exists when two or more of the independent variables are highly correlated with each other. There should be minimal correlation between independent variables in the model.

- Homoscedasticity – The error variance should be constant across the values of the independent variables. The size of the error terms should stay consistent across all values of the independent variable.

- Independence of errors – The error terms of each observation must be independent of each other. Residuals should not be correlated.
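Two of these assumptions lend themselves to quick numeric checks. The sketch below uses only the standard library: a Pearson correlation between two predictors as a rough multicollinearity check, and the Durbin-Watson statistic as a rough check for correlated residuals. The helper names and sample values are illustrative assumptions, and neither check replaces a fuller diagnostic such as residual plots.

```python
import math

def pearson(a, b):
    """Pearson correlation between two equal-length sequences."""
    mean_a, mean_b = sum(a) / len(a), sum(b) / len(b)
    cov = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    var_a = sum((x - mean_a) ** 2 for x in a)
    var_b = sum((y - mean_b) ** 2 for y in b)
    return cov / math.sqrt(var_a * var_b)

def durbin_watson(residuals):
    """Durbin-Watson statistic; values near 2 suggest uncorrelated residuals."""
    num = sum((residuals[i] - residuals[i - 1]) ** 2
              for i in range(1, len(residuals)))
    den = sum(r ** 2 for r in residuals)
    return num / den

# Made-up values for illustration only.
sqft = [800, 1000, 1200, 1500, 1800]
rooms = [2, 3, 3, 4, 5]
residuals = [0.5, -0.3, 0.2, -0.4, 0.1]

print("correlation between predictors:", pearson(sqft, rooms))
print("Durbin-Watson statistic:", durbin_watson(residuals))
```

A correlation close to 1 or -1 between two predictors is a sign of multicollinearity, and a Durbin-Watson value far from 2 hints that the residuals are not independent.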

Training a Linear Regression Model

Linear regression models can be easily trained using sklearn in Python. The steps are:

- Import the linear model class (LinearRegression) and other necessary libraries such as pandas and numpy.

- Load and prepare the training dataset.

- Define the independent and dependent features.

- Split the data into training and test sets.

- Instantiate the LinearRegression() model and fit it to the training data.

- Evaluate the model on the test set using model.score(), which returns R-squared, or other evaluation metrics such as Mean Absolute Error.

- Use the model to make predictions on new data using model.predict().
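The steps above can be sketched end to end as follows. The dataset is synthetic and generated in place of loading a real one, and the feature meanings, the 75/25 split, and the random seeds are assumptions made for this example.

```python
import numpy as np
from sklearn.linear_model import LinearRegression
from sklearn.metrics import mean_absolute_error
from sklearn.model_selection import train_test_split

# Synthetic data standing in for a real dataset (an assumption for this sketch).
rng = np.random.default_rng(0)
years_experience = rng.uniform(0, 10, size=100)
education_level = rng.integers(0, 3, size=100)
salary = (30 + 3 * years_experience + 2 * education_level
          + rng.normal(0, 0.1, size=100))

# Define the independent features (X) and the dependent feature (y).
X = np.column_stack([years_experience, education_level])
y = salary

# Split the data into training and test sets.
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, random_state=42
)

# Instantiate the model and fit it to the training data.
model = LinearRegression()
model.fit(X_train, y_train)

# Evaluate on the held-out test set; score() returns R-squared.
r2 = model.score(X_test, y_test)
mae = mean_absolute_error(y_test, model.predict(X_test))
print("coefficients:", model.coef_, "intercept:", model.intercept_)
print("R-squared:", r2, "MAE:", mae)

# Predict for a new observation: 4 years of experience, education level 1.
prediction = model.predict(np.array([[4.0, 1.0]]))
print("predicted salary:", prediction[0])
```

Because the synthetic data follow a known linear rule with little noise, the fitted coefficients should land close to the values used to generate them, which makes this a convenient sanity check before moving to real data.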

Evaluating Model Performance

To evaluate linear regression model performance, these are some of the key metrics:

- R-squared: Represents the proportion of variance in the dependent variable that is explained by the model. Higher is better. The maximum value is 1, and it can be negative on held-out data when the model fits worse than simply predicting the mean.

- Mean Absolute Error: Average of absolute differences between predicted and actual values. Lower is better.

- Mean Squared Error: Similar to MAE but squares the differences before averaging them, which makes it more sensitive to outliers. Lower is better.

- Root Mean Squared Error: Square root of the MSE. Easier to interpret due to being in same units as the dependent variable. Lower is better.
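These four metrics follow directly from their definitions. Here is a minimal pure-Python sketch; the `regression_metrics` helper and the sample values are made up for illustration, and in practice a library such as scikit-learn provides equivalent functions.

```python
import math

def regression_metrics(y_true, y_pred):
    """Compute MAE, MSE, RMSE and R-squared from actual and predicted values."""
    n = len(y_true)
    errors = [t - p for t, p in zip(y_true, y_pred)]

    mae = sum(abs(e) for e in errors) / n   # mean of absolute errors
    mse = sum(e * e for e in errors) / n    # mean of squared errors
    rmse = math.sqrt(mse)                   # same units as the target

    mean_true = sum(y_true) / n
    ss_res = sum(e * e for e in errors)                 # unexplained variation
    ss_tot = sum((t - mean_true) ** 2 for t in y_true)  # total variation
    r2 = 1 - ss_res / ss_tot

    return {"mae": mae, "mse": mse, "rmse": rmse, "r2": r2}

# Made-up actual and predicted values.
y_true = [3.0, 5.0, 7.0, 9.0]
y_pred = [2.5, 5.0, 7.5, 9.0]

metrics = regression_metrics(y_true, y_pred)
print(metrics)
```

For these sample values the MAE is 0.25, the MSE is 0.125, and R-squared is 0.975.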

Making Predictions

Once the model is trained, we can use it to make predictions on new data:

- Take new input data and format it the same way as the training data (the same features, in the same order, with the same preprocessing).

- Use model.predict(new_data) to generate predicted target variable values.

- The output is the predicted values corresponding to the new inputs based on patterns learned from the training data.
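A short sketch of this prediction step, assuming scikit-learn and a toy model trained on made-up data that follow y = 2x + 1. The point to notice is that the new inputs are shaped as a two-dimensional array with one row per observation and one column per feature, matching the training data.

```python
import numpy as np
from sklearn.linear_model import LinearRegression

# Toy training data following y = 2x + 1 (made up for illustration).
X_train = np.array([[1.0], [2.0], [3.0], [4.0], [5.0]])
y_train = np.array([3.0, 5.0, 7.0, 9.0, 11.0])

model = LinearRegression().fit(X_train, y_train)

# New inputs must have the same column layout as X_train:
# shape (n_samples, n_features), here (2, 1).
new_data = np.array([[6.0], [7.5]])
predictions = model.predict(new_data)

print(predictions)
```

Passing a flat list such as `[6.0, 7.5]` instead of a two-dimensional array would raise an error in scikit-learn, so reshaping new inputs is a common first step.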

Real-World Applications

Some common real-world uses of linear regression:

- Predicting housing prices based on features like square footage, location, number of rooms, etc.

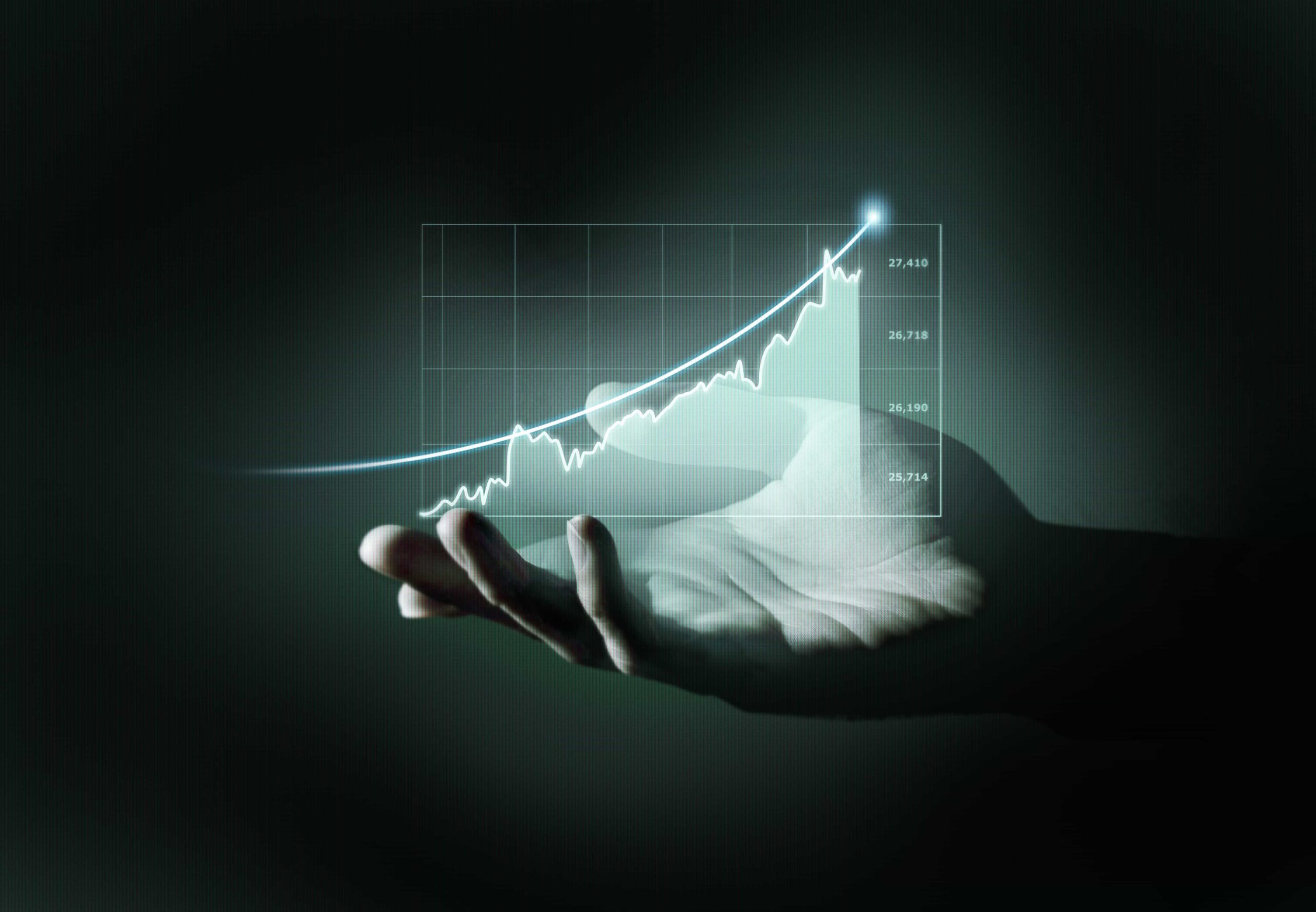

- Forecasting financial trends such as stock and commodity prices based on historical price movements.

- Estimating the effect of interest rates, inflation, unemployment on GDP growth rates.

- Predicting product sales based on advertising budgets, competition, pricing, discounts offered, etc.

- Estimating the risk of insurance policies based on factors like age, health conditions, lifestyle indicators like smoking, alcohol usage etc.

Conclusion

In summary, linear regression is a fundamental machine learning algorithm for predictive analysis. When the relationship between the variables is approximately linear, linear regression serves as a simple yet powerful approach. When its key assumptions hold and suitable evaluation metrics are used, linear regression models can deliver useful and interpretable predictions for business and scientific applications.