Microsoft Unveils Phi-2: A Breakthrough in AI Language Models

In a remarkable advancement for artificial intelligence, Microsoft has unveiled Phi-2, a cutting-edge language model equipped with 2.7 billion parameters. This model not only stands as a testament to Microsoft’s commitment to AI development but also sets a new benchmark in the efficiency and capabilities of compact language models.

Phi-2: Building on a Legacy of Excellence

Phi-2 is the latest in Microsoft’s series of language models, following the successful Phi-1 and Phi-1.5. It’s not just another incremental upgrade; Phi-2 has the distinction of matching, and in some instances surpassing, models up to 25 times its size. Microsoft attributes this achievement to its focused approach to model scaling and careful curation of training data.

Key to Success: Quality Training Data and Innovative Scaling

At the core of Phi-2’s success are two pivotal factors. The first is high-quality training data: Microsoft used “textbook-quality” data, supplemented with web data carefully filtered for educational value and content quality. The second is a set of scaling techniques that build on the 1.3 billion-parameter Phi-1.5 and that Microsoft credits with significantly improving Phi-2’s performance.

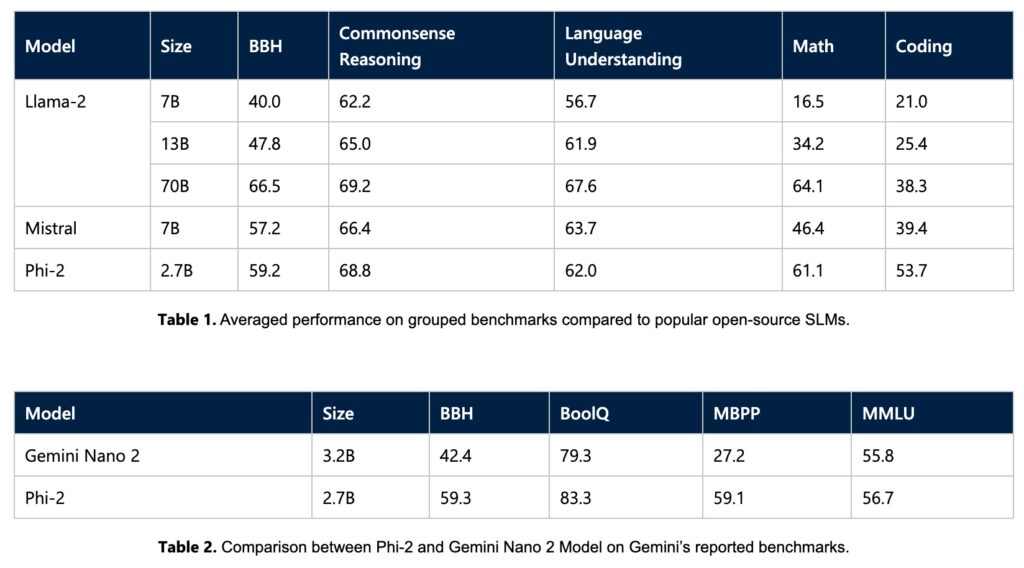

Benchmarking a Giant Slayer

Despite its relatively modest size, Phi-2 has shown remarkable results on a variety of benchmarks, including Big Bench Hard, commonsense reasoning, and language understanding. It stands toe-to-toe with, and in some cases outperforms, larger counterparts like Mistral and Llama-2, as well as Google’s Gemini Nano 2.

Real-World Prowess

Phi-2’s capabilities extend beyond benchmarks. It has demonstrated exceptional skills in solving complex physics problems and correcting student errors, indicating its potential in educational and research applications.

Technical Underpinnings

This Transformer-based model, with its next-word prediction objective, was trained on an expansive 1.4 trillion tokens from synthetic and web datasets. Training ran for 14 days on 96 A100 GPUs. Microsoft also emphasizes safety, claiming that Phi-2 surpasses open-source models in reducing toxicity and bias.
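To put those training figures in perspective, here is a rough back-of-the-envelope sketch in Python. It uses only the numbers quoted above. The function names are hypothetical. The compute estimate relies on the common 6 × parameters × tokens rule of thumb, which is an assumption brought in for illustration and not a figure reported by Microsoft.

```python
# Figures as quoted in the article.
PARAMS = 2.7e9   # model parameters
TOKENS = 1.4e12  # training tokens
GPUS = 96        # A100 GPUs
DAYS = 14        # wall-clock training time in days


def gpu_hours(gpus: int, days: int) -> int:
    """Total GPU-hours, assuming every GPU ran for the whole period."""
    return gpus * days * 24


def tokens_per_parameter(tokens: float, params: float) -> float:
    """How many training tokens were seen per model parameter."""
    return tokens / params


def approx_training_flops(params: float, tokens: float) -> float:
    """Rule-of-thumb estimate (6 * N * D); an assumption, not a reported number."""
    return 6 * params * tokens


if __name__ == "__main__":
    print(f"GPU-hours:            {gpu_hours(GPUS, DAYS):,}")
    print(f"Tokens per parameter: {tokens_per_parameter(TOKENS, PARAMS):.0f}")
    print(f"Approx. training FLOPs: {approx_training_flops(PARAMS, TOKENS):.2e}")
```

Under these assumptions, the run works out to 32,256 GPU-hours and roughly 519 training tokens per parameter, which illustrates how heavily a model of this size leans on its data.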

Continuing the Quest for AI Excellence

With Phi-2, Microsoft solidifies its position as a leader in AI innovation, particularly in the realm of language models. This development not only demonstrates the potential of smaller base models but also opens new avenues for research and application in AI.