Role of GPUs in Training Large Language Models

- The Rise of Large Language Models

- Why GPUs are Essential for LLM Training

- The LLM Training Process

- Types of GPUs Used for LLM Training

- Hardware Platforms for LLM Training

- Software Frameworks and Libraries

- Optimizing Communication in LLM Training

- Measuring LLM Training Efficiency

- Challenges and Limitations

- Parallelism Strategies for LLM Training

- Future Trends

- Alternative Hardware Platforms

- Open-Source LLMs

- Evaluating LLM Performance

- Security in LLM Training

- Cost Optimization in LLM Deployment

- Case Studies

- Scaling LLM Training

- LLM Research and Development

- Hardware Considerations for LLM Training

- Conclusion

- Works cited

The Rise of Large Language Models

LLMs are sophisticated AI systems that learn to generate human language by training on vast datasets containing billions of words and phrases 2. This training process allows them to master the nuances and structure of language, enabling them to perform tasks such as translation, sentiment analysis, chatbot interaction, and content generation 2. Some of the most popular LLMs include the Generative Pre-trained Transformer (GPT) models, such as BERT and GPT-3 2.

The training of these models involves complex mathematical operations and the processing of massive amounts of data, presenting a significant computational challenge 2. This is where GPUs come into play.

Why GPUs are Essential for LLM Training

GPUs are specifically designed for parallel processing, making them ideal for handling the computationally intensive tasks involved in LLM training 2. Unlike Central Processing Units (CPUs), which excel at handling sequential tasks one instruction at a time, GPUs can tackle thousands of tasks simultaneously 2. This parallel processing capability allows GPUs to efficiently process the many components involved in understanding and generating human language 2.

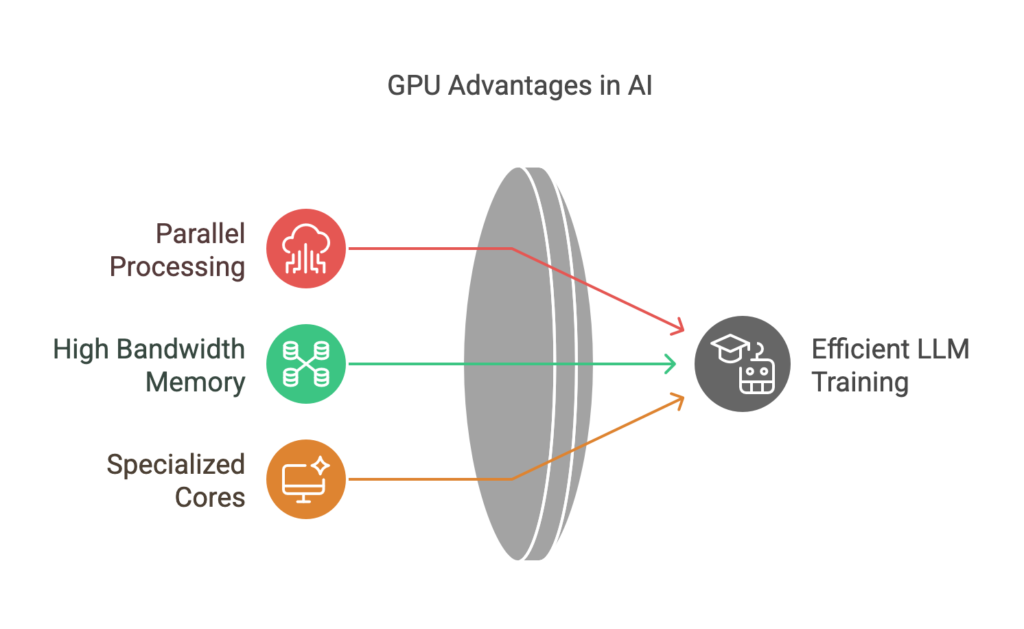

GPUs offer several key advantages for LLM training 5:

- Parallel Processing: GPUs excel at handling the parallel nature of neural network operations, enabling them to process vast amounts of data concurrently.

- High Bandwidth Memory: GPUs can quickly access and process large datasets, crucial for handling the extensive parameters of LLMs.

- Specialized Cores: Modern GPUs include specialized cores like Tensor Cores, which are purpose-built for accelerating AI workloads, including the matrix multiplications that form the backbone of LLM computations 6.

Furthermore, GPUs offer high computational throughput, which is necessary for the complex mathematical operations involved in neural network training 2. They are particularly adept at matrix multiplication, a fundamental operation in deep learning 2. Not only are GPUs faster than CPUs for LLM training, but they are also more cost-effective in the long run due to reduced training time and energy consumption 2.

The LLM Training Process

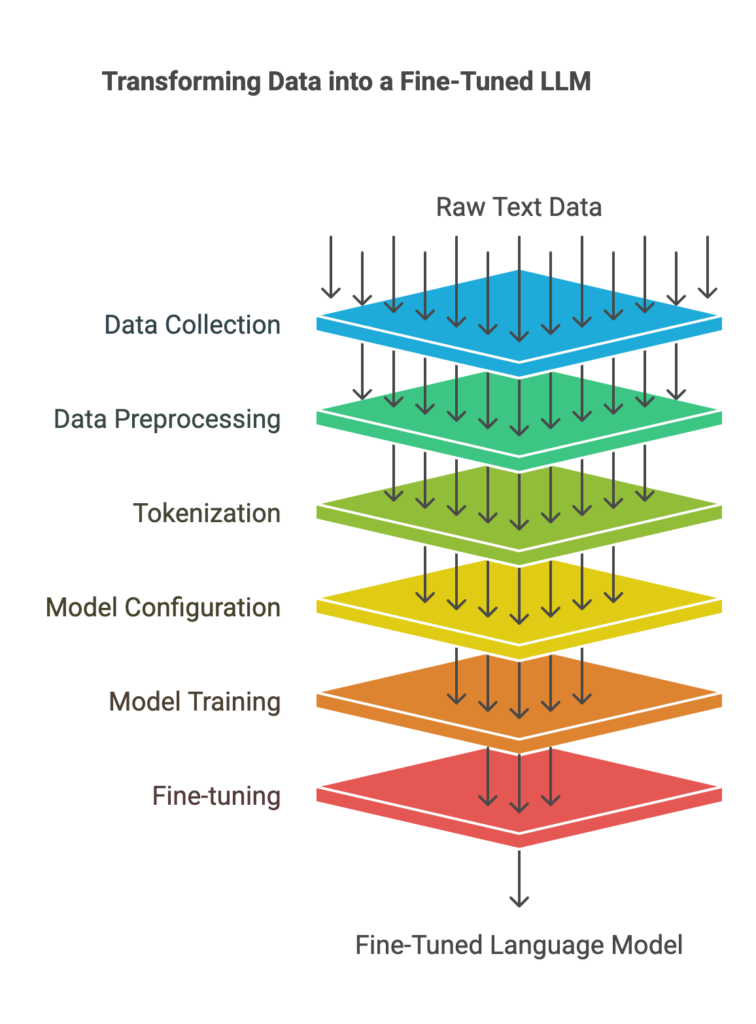

Training an LLM is a multi-stage process that involves careful data preparation, model configuration, and iterative refinement 3:

- Data Collection and Preprocessing: The first step involves gathering vast amounts of text data from various sources, such as books, articles, and code repositories. This data is then cleaned and preprocessed to remove noise, inconsistencies, and irrelevant information.

- Tokenization: The preprocessed text is then broken down into smaller units called tokens, which can be words, subwords, or even characters. This tokenization process allows the model to represent and process language efficiently.

- Model Configuration: A transformer-based neural network architecture is typically used for LLMs due to its effectiveness in natural language processing tasks. This architecture involves configuring various parameters, such as the number of layers, attention heads, and hidden units.

- Model Training: The model is trained on the prepared data, learning to predict the next token in a sequence given the preceding tokens. This process involves numerous iterations and adjustments to the model’s internal weights to improve its predictive accuracy.

- Fine-tuning: After initial training, the model is fine-tuned using techniques like Supervised Fine-Tuning (SFT) and Reinforcement Learning from Human Feedback (RLHF). SFT involves training the model on human-labeled data to align its responses with human expectations. RLHF further refines the model by using human feedback to reward desirable behaviors and discourage undesirable ones.

Types of GPUs Used for LLM Training

The increasing demands of LLMs and AI have driven rapid advancements in GPU technology, leading to the development of sophisticated language models 2. These advancements include:

- Increased Memory Capacity: Larger models require more memory to process massive datasets. Modern GPUs offer substantial memory capacity, allowing researchers to build and train larger LLMs 2. However, it’s important to note that memory capacity is not the only factor; memory bandwidth and interconnect speed also play crucial roles in training large LLMs.

- Faster Processing Speeds: GPUs with faster processing speeds significantly reduce training time, accelerating research and development 2.

- Specialized Hardware: NVIDIA’s Tensor Core technology accelerates matrix computations, a core operation in deep learning, leading to faster and more efficient LLM training 2.

When choosing a GPU for LLM training, several factors need to be considered 8:

- Model Size: Larger models with more parameters require GPUs with higher memory capacity and computational power.

- Precision: The numerical precision used for representing model parameters (e.g., FP32, FP16, INT8) affects memory requirements and computational efficiency.

- Batch Size: The number of training examples processed simultaneously impacts memory usage and training speed.

Some of the leading NVIDIA GPUs designed for LLM training include:

| GPU Name | CUDA Cores | Memory Capacity | Memory Bandwidth | TDP |

|---|---|---|---|---|

| NVIDIA H100 | 18,432 | 80GB HBM3 | 3 TB/s | 350W (SXM) / 700W (SXM5) |

| NVIDIA A100 | 6,912 | 40GB/80GB HBM2e | 1.6 TB/s (40GB) / 2 TB/s (80GB) | 250W (PCIe) / 400W (SXM) |

| NVIDIA L40 | 18,176 | 48GB GDDR6 | 864 GB/s | 300W |

| NVIDIA RTX 4090 | 16,384 | 24GB GDDR6X | 1008 GB/s | 450W |

| NVIDIA T4 | 2,560 | 16GB GDDR6 | 320 GB/s | 70W |

In addition to NVIDIA, AMD also offers GPUs suitable for LLM training, such as the AMD Radeon Pro W7900 9. This GPU features the latest RDNA3 architecture with advanced vector processing capabilities and high-bandwidth memory operations, making it well-suited for handling the computational demands of LLM training.

Hardware Platforms for LLM Training

While individual GPUs provide significant computational power, training large LLMs often requires scaling beyond a single device. This is where specialized hardware platforms come into play:

- NVIDIA DGX Systems: NVIDIA DGX systems are full-stack solutions designed for enterprise-grade machine learning 10. These systems integrate high-performance NVIDIA GPUs with optimized software and networking to provide a powerful and scalable platform for LLM training.

Software Frameworks and Libraries

Several software frameworks and libraries are crucial for training LLMs on GPUs. These frameworks provide the necessary tools and functionalities for building, training, and deploying these models efficiently. When choosing a machine learning framework, several factors need to be considered 11:

- Type of Model: Different frameworks may be better suited for different types of machine learning models, such as classification, regression, or neural networks.

- Training Method: The chosen framework should support the desired training method, such as supervised learning, unsupervised learning, or reinforcement learning.

- Targeted Hardware: The framework should be compatible with the available hardware, including CPUs, GPUs, and other accelerators.

Some of the key frameworks and libraries include:

- PyTorch: A popular open-source machine learning framework that provides a flexible and efficient platform for LLM training 12.

- Megatron-DeepSpeed: A powerful library that combines the strengths of Megatron-LM and DeepSpeed, enabling efficient distributed training of large models 13.

- Transformers: An open-source library that provides general-purpose architectures like BERT, ROBERTA, and GPT-2 for Natural Language Understanding (NLU) and Natural Language Generation (NLG) 14.

- DeepSpeed: A deep learning optimization library that makes distributed training and inference easy and efficient 14.

- Colossal-AI: A library that provides various tools for efficiently distributing training workloads and optimizing heterogeneous memory management 14.

- NVIDIA NeMo: An end-to-end, cloud-native framework for building, customizing, and deploying generative AI models 15.

- Fast-LLM: An open-source library designed for fast, scalable, and cost-efficient LLM training on GPUs 16.

- LLMTools: A user-friendly library for running and fine-tuning LLMs in low-resource settings 17.

- Llama Index: A data framework for integrating LLMs with various data sources, particularly for retrieval-augmented generation (RAG) applications 18.

These frameworks and libraries often work in conjunction with programming languages like Python and C++ to facilitate the development and training of LLMs 13.

Optimizing Communication in LLM Training

Efficient communication between GPUs is crucial for achieving high performance in LLM training, especially when scaling to large clusters. One technique for optimizing communication is compression-assisted MPI collectives 19. MPI (Message Passing Interface) is a standard for inter-process communication commonly used in distributed computing. By compressing the data exchanged between GPUs during MPI collectives, the communication overhead can be significantly reduced, leading to improved training efficiency.

Measuring LLM Training Efficiency

While fitting LLMs on a single GPU is essential, it’s important to consider the concept of “GPU Hours” as a more comprehensive measure of efficiency 20. GPU Hours represent the total amount of time spent training the model on a GPU, taking into account both the model’s size and the training duration. This metric helps to evaluate the overall cost and resource utilization of LLM training.

Challenges and Limitations

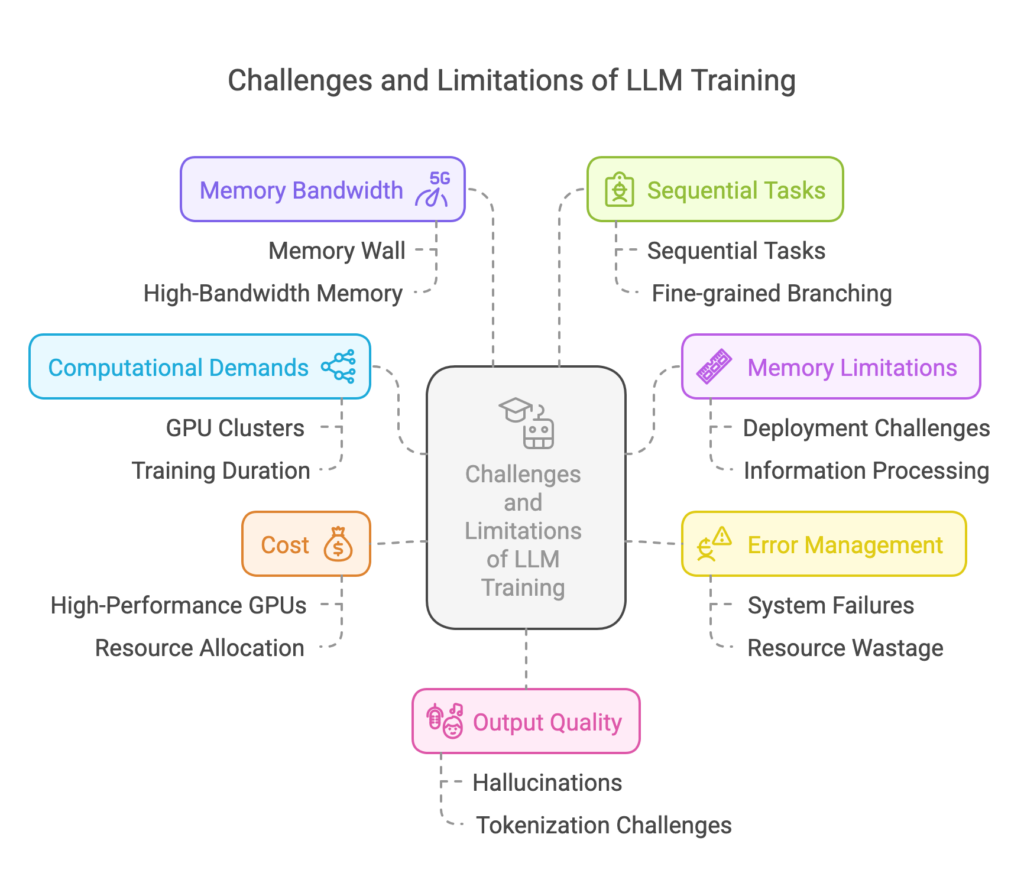

While GPUs have significantly accelerated LLM training, there are challenges and limitations associated with their use:

- High Computational Demands: Training LLMs requires immense computational power, necessitating extensive GPU clusters 21. For example, training GPT-3 with its 175 billion parameters would take 288 years on a single NVIDIA V100 GPU 21.

- Memory Limitations: LLMs demand substantial memory due to their processing of vast amounts of information 22. This can pose difficulties, particularly when attempting to deploy them on memory-constrained devices 22.

- Error Management and System Reliability: Large-scale training risks errors and system failures 21. Without effective error recovery, training can be interrupted, wasting resources and increasing costs 21. Meta, for example, encountered various hardware failures during their LLM training, including GPUs falling off the PCIe bus, uncorrectable errors in DRAM and SRAM, and network cable failures 23.

- Cost: Training LLMs requires significant financial investments, particularly in high-performance GPUs 21. Inefficient resource allocation can lead to skyrocketing costs 21. The cost of training a single LLM can range from tens of thousands to millions of dollars, depending on the model size and the amount of data used 24.

- Memory Bandwidth Bottlenecks: Memory bandwidth can limit training performance, even with high-bandwidth memory stacks like HBM 25. This “memory wall” challenge arises because memory systems haven’t kept pace with the rapid increase in GPU computational power.

- Sequential Tasks and Fine-grained Branching: GPUs are not well-suited for all types of problems. Sequential tasks, where each step depends on the previous one, and fine-grained branching, where the code execution path varies significantly between threads, can limit GPU performance 26.

- Overhead for Smaller Networks: For smaller neural networks, the overhead of setting up computation on the GPU may outweigh the speedup gained from parallel processing 27. It’s important to benchmark CPU vs. GPU performance to determine the optimal hardware for a given task.

- Limited Bandwidth of Integrated GPUs: Integrated GPUs, which share system memory with the CPU, often have limited memory bandwidth, which can hinder LLM training 28.

- Output Quality and Hallucinations: LLMs can sometimes generate outputs that are factually incorrect or incoherent, referred to as “hallucinations” 29. These hallucinations can be problematic in applications where accuracy and reliability are critical.

- Tokenization Challenges: Tokenizers, which break down text into smaller units, can introduce challenges such as computational overhead, language dependence, and information loss 30.

Parallelism Strategies for LLM Training

To overcome the limitations of training large LLMs on a single GPU, various parallelism strategies are employed 8:

- Data Parallelism: In data parallelism, the same model is replicated on multiple GPUs, and each GPU processes a different portion of the training data. This allows for faster training by distributing the workload.

- Model Parallelism: In model parallelism, different parts of the model are assigned to different GPUs. This is necessary when the model is too large to fit on a single GPU. Naive model parallelism can be inefficient due to idle time and communication overhead. Techniques like GPipe address these limitations by optimizing the partitioning of the model and the flow of data between GPUs.

- Pipeline Parallelism: In pipeline parallelism, the model is divided into stages, and each stage is assigned to a different GPU. This allows for concurrent execution of different parts of the model, further improving training speed.

These parallelism techniques are often used in combination to maximize training efficiency and scalability.

Future Trends

The future of GPU technology for LLM training promises continued advancements and innovations:

- AI-Specific Hardware: GPUs are evolving to include more AI-specific hardware, such as dedicated inference accelerators and GPUs with multiple Tensor Cores 32. This trend is driven by the increasing demand for specialized hardware that can efficiently handle the unique computational requirements of AI workloads, including LLMs.

- Heterogeneous Architectures: The future will see increased integration of CPUs, AI accelerators, and FPGAs (Field-Programmable Gate Arrays) with GPUs 32. This integration will enable more flexible and efficient computing systems that can adapt to a wider range of AI tasks.

- Unified Memory and Chiplet Design: Unified memory architectures and chiplet designs will enable more efficient and scalable GPUs 32. Unified memory allows CPUs and GPUs to share the same memory space, reducing data transfer overhead. Chiplet designs allow for more modular and customizable GPUs, enabling the development of specialized hardware for specific AI workloads.

- Addressing the Memory Wall: Innovations like Tensor Cores and unified memory architectures are being developed to address the “memory wall” challenge, which limits the performance of LLMs due to memory bandwidth bottlenecks 25.

Alternative Hardware Platforms

While GPUs are currently the dominant hardware platform for LLM training, alternative platforms are emerging:

- TPUs (Tensor Processing Units): Google’s TPUs are specifically designed for machine learning workloads and offer high performance for LLM training.

- FPGAs (Field-Programmable Gate Arrays): FPGAs offer flexibility and customization, allowing for the development of hardware tailored to specific LLM training needs.

- CPUs with Specialized Instructions: CPUs are evolving to include specialized instructions that accelerate AI workloads, potentially making them more competitive with GPUs for LLM training.

However, these alternatives currently lag behind GPUs in terms of overall performance and maturity for LLM training 32.

Open-Source LLMs

The development of open-source LLMs is democratizing access to this powerful technology 34:

- LLaMA 3.1: Meta’s LLaMA 3.1 is a series of open-source LLMs that offer high performance and support for a wide range of NLP tasks. The latest version includes models with up to 405 billion parameters and an increased context length of 128,000 tokens, enabling improved performance on complex reasoning tasks.

- GPT-NeoX-20B: Developed by EleutherAI, GPT-NeoX-20B is a 20 billion parameter LLM that has shown strong performance on various language understanding and knowledge-based tasks.

Evaluating LLM Performance

Evaluating the performance of LLMs is crucial for ensuring their accuracy, reliability, and safety. DeepEval is an open-source framework that provides tools and metrics for evaluating LLM applications 36. It offers various metrics to assess aspects such as answer relevancy, factual accuracy, and bias detection.

Security in LLM Training

As LLMs become more prevalent, ensuring the security of their training process is paramount. Research is ongoing to develop secure distributed LLM training frameworks that protect model parameters and data from malicious actors 37.

Cost Optimization in LLM Deployment

The cost of deploying and running LLMs can be significant. FrugalGPT is an approach that aims to reduce these costs by strategically selecting the most appropriate and cost-effective model for a given task 38.

Case Studies

Several successful case studies demonstrate the effectiveness of GPUs in LLM training:

- MegaScale: A production system developed by Meta for training LLMs at the scale of more than 10,000 GPUs, achieving high training efficiency and stability 39. This case study highlights the challenges of scaling LLM training to such a large scale and the importance of factors such as hardware reliability, fast recovery on failure, efficient preservation of the training state, and optimal connectivity between GPUs.

- Perplexity: An API tool powered by NVIDIA GPUs and optimized for fast LLM inference with NVIDIA TensorRT-LLM 40. This case study demonstrates how GPUs can be used to accelerate LLM inference, enabling real-time applications and reducing costs.

- LILT: A generative AI platform that leverages NVIDIA GPUs and NVIDIA NeMo for faster translation of time-sensitive information at scale 41. This case study showcases the use of GPUs in a real-world application, highlighting the benefits of increased throughput and scalability.

Scaling LLM Training

Scaling LLM training to thousands of GPUs presents unique challenges 23. As model size and data volume increase, ensuring efficient communication between GPUs and maintaining high training stability become critical. Techniques such as compression-assisted MPI collectives and optimized parallelism strategies are essential for achieving optimal performance and scalability.

LLM Research and Development

The field of LLM research and development is rapidly evolving, with new models and techniques emerging constantly 44. Milestone papers have introduced innovations such as the transformer architecture, scaling laws for neural language models, and techniques for improving model efficiency and generalization.

Hardware Considerations for LLM Training

When setting up hardware for LLM training, several considerations and best practices should be kept in mind 45:

- Budget: LLM training requires high-performance hardware, which can be expensive. Prioritize components based on budget and the scale of training planned.

- Future-Proofing: Aim for hardware that can handle future LLMs, as model sizes and complexity continue to increase.

- Cloud vs. On-Premises: Consider whether to build an on-premises setup or utilize cloud services, which offer flexibility and scalability.

- Optimization: Efficient code and model optimization techniques can reduce hardware requirements and training time.

- Monitoring and Maintenance: Regularly monitor hardware to detect issues early and perform routine maintenance.

Conclusion

GPUs have become indispensable for training large language models, enabling the development of sophisticated AI systems that can understand and generate human-like text. The advancements in GPU technology, coupled with innovative software frameworks and libraries, have significantly accelerated LLM training and paved the way for new possibilities in artificial intelligence. While challenges and limitations remain, ongoing research and development efforts continue to push the boundaries of GPU capabilities, promising even more powerful and efficient LLM training in the future.

One of the key challenges in the field of LLMs is the shortage of skilled professionals who can effectively develop, train, and deploy these complex models 22. This talent shortage has significant implications for the future of LLMs, potentially hindering their adoption and limiting their full potential. Addressing this challenge through education, training, and collaboration will be crucial for the continued advancement of LLMs and their successful integration into various applications.

Works cited

1. Challenges of Training Large Language Models: An In-depth Look – Shiksha Online, accessed February 9, 2025, https://www.shiksha.com/online-courses/articles/challenges-of-training-large-language-models-an-in-depth-look/

2. Unleashing the Potential of GPUs for Training LLMs – Packt, accessed February 9, 2025, https://www.packtpub.com/en-us/learning/how-to-tutorials/unleashing-the-potential-of-gpus-for-training-llms

3. Overcoming LLM Training Challenges: Strategies for Business Success – Turing, accessed February 9, 2025, https://www.turing.com/resources/llm-training-challenges

4. What is a GPU & Its Importance for AI | Google Cloud, accessed February 9, 2025, https://cloud.google.com/discover/gpu-for-ai

5. GPU for Machine Learning & AI in 2025: On-Premises vs Cloud – MobiDev, accessed February 9, 2025, https://mobidev.biz/blog/gpu-machine-learning-on-premises-vs-cloud

6. Top NVIDIA GPUs for LLM Inference | by Bijit Ghosh | Medium, accessed February 9, 2025, https://medium.com/@bijit211987/top-nvidia-gpus-for-llm-inference-8a5316184a10

7. Accelerating Large Language Models: The H100 GPU’s Role in Advanced AI Development, accessed February 9, 2025, https://blog.paperspace.com/h100-deep-learning-frameworks-compatibility/

8. How to Choose the Best GPU for LLM: A Practical Guide, accessed February 9, 2025, https://www.hyperstack.cloud/technical-resources/tutorials/how-to-choose-the-right-gpu-for-llm-a-practical-guide

9. Training Large Language Models with an AMD GPU | by Raajas Sode | Jan, 2025 – Medium, accessed February 9, 2025, https://medium.com/@RaajasSode/training-large-language-models-with-an-amd-gpu-85e53616b20c

10. Best GPU for Deep Learning: Considerations for Large-Scale AI, accessed February 9, 2025, https://www.run.ai/guides/gpu-deep-learning/best-gpu-for-deep-learning

11. Machine and Deep Learning Frameworks – HPC Wiki, accessed February 9, 2025, https://hpc-wiki.info/hpc/Machine_and_Deep_Learning_Frameworks

12. lumi-supercomputer.eu, accessed February 9, 2025, https://lumi-supercomputer.eu/scaling-the-pre-training-of-large-language-models-of-100b-parameters-to-thousands-of-amd-mi250x-gpus-on-lumi/#:~:text=PyTorch%20is%20the%20most%20common,working%20out%20of%20the%20box.

13. Scaling the pre-training of large language models of >100B …, accessed February 9, 2025, https://lumi-supercomputer.eu/scaling-the-pre-training-of-large-language-models-of-100b-parameters-to-thousands-of-amd-mi250x-gpus-on-lumi/

14. Key Libraries for Developing Large Language Models (LLMs) | by Sirjanabhatta | Medium, accessed February 9, 2025, https://medium.com/@sirjanabhatta6/key-libraries-for-developing-large-language-models-llms-60a740906bd6

15. Large Language Models — NVIDIA NeMo Framework User Guide, accessed February 9, 2025, https://docs.nvidia.com/nemo-framework/user-guide/latest/llms/index.html

16. Train Large Language Models Faster Than Ever Before – Fast-LLM, accessed February 9, 2025, https://servicenow.github.io/Fast-LLM/

17. kuleshov-group/llmtools: Finetuning Large Language Models on One Consumer GPU in 2 Bits – GitHub, accessed February 9, 2025, https://github.com/kuleshov-group/llmtools

18. 7 Large Language Model Frameworks to Improve Productivity – ODSC – Open Data Science, accessed February 9, 2025, https://odsc.medium.com/7-large-language-model-frameworks-to-improve-productivity-4b85bdbc151d

19. Accelerating Large Language Model Training with Hybrid GPU-based Compression – arXiv, accessed February 9, 2025, https://arxiv.org/html/2409.02423v1

20. Large Language Models: How to Run LLMs on a Single GPU – Hyperight, accessed February 9, 2025, https://hyperight.com/large-language-models-how-to-run-llms-on-a-single-gpu/

21. 10 Challenges and Solutions for Training Foundation LLMs, accessed February 9, 2025, https://www.hyperstack.cloud/blog/case-study/challenges-and-solutions-for-training-foundation-llms

22. The Unspoken Challenges of Large Language Models – Deeper Insights, accessed February 9, 2025, https://deeperinsights.com/ai-blog/the-unspoken-challenges-of-large-language-models

23. How Meta trains large language models at scale, accessed February 9, 2025, https://engineering.fb.com/2024/06/12/data-infrastructure/training-large-language-models-at-scale-meta/

24. The Costs and Complexities of Training Large Language Models – Deeper Insights, accessed February 9, 2025, https://deeperinsights.com/ai-blog/the-costs-and-complexities-of-training-large-language-models

25. The role of GPU memory for training large language models – Oracle Blogs, accessed February 9, 2025, https://blogs.oracle.com/cloud-infrastructure/post/role-gpu-memory-training-large-language-models

26. What problems fit to GPU? — GPU programming: why, when and how? documentation, accessed February 9, 2025, https://enccs.github.io/gpu-programming/3-gpu-problems/

27. GPU vs CPU for inference : r/learnmachinelearning – Reddit, accessed February 9, 2025, https://www.reddit.com/r/learnmachinelearning/comments/1aubc4u/gpu_vs_cpu_for_inference/

28. [D] Should I Get a MacBook or a Laptop with an NVIDIA GPU for Machine Learning and AI? : r/MachineLearning – Reddit, accessed February 9, 2025, https://www.reddit.com/r/MachineLearning/comments/1e7a306/d_should_i_get_a_macbook_or_a_laptop_with_an/

29. Large Language Models (LLMs): Technology, use cases, and challenges – Swimm, accessed February 9, 2025, https://swimm.io/learn/large-language-models/large-language-models-llms-technology-use-cases-and-challenges

30. 8 Challenges Of Building Your Own Large Language Model – Labellerr, accessed February 9, 2025, https://www.labellerr.com/blog/challenges-in-development-of-llms/

31. How to train a Large Language Model using limited hardware? – deepsense.ai, accessed February 9, 2025, https://deepsense.ai/blog/how-to-train-a-large-language-model-using-limited-hardware/

32. Future Trends in GPU Technology | DigitalOcean, accessed February 9, 2025, https://www.digitalocean.com/community/conceptual-articles/future-trends-in-gpu-technology

33. Hardware Recommendations for Large Language Model Servers – Puget Systems, accessed February 9, 2025, https://www.pugetsystems.com/solutions/ai-and-hpc-workstations/ai-large-language-models/hardware-recommendations-2/

34. 8 Top Open-Source LLMs for 2024 and Their Uses – DataCamp, accessed February 9, 2025, https://www.datacamp.com/blog/top-open-source-llms

35. Top 10 Open-Source LLM Frameworks 2024 – Large Language Models Directory – All LLMs, accessed February 9, 2025, https://llmmodels.org/blog/top-10-open-source-llm-frameworks-2024/

36. Top 5 Trending Open-source LLM Tools & Frameworks You Must Know About, accessed February 9, 2025, https://dev.to/guybuildingai/top-5-trending-open-source-llm-tools-frameworks-you-must-know-about-1fk7

37. [2401.09796] A Fast, Performant, Secure Distributed Training Framework For Large Language Model – arXiv, accessed February 9, 2025, https://arxiv.org/abs/2401.09796

38. Beyond ChatGPT: A Guide to Alternative Large Language Models – Teneo.Ai, accessed February 9, 2025, https://www.teneo.ai/blog/beyond-chatgpt-a-guide-to-alternative-large-language-models

39. MegaScale: Scaling Large Language Model Training to More Than 10,000 GPUs – arXiv, accessed February 9, 2025, https://arxiv.org/html/2402.15627v1

40. Accelerating Large Language Model Inference | Case Study – NVIDIA, accessed February 9, 2025, https://www.nvidia.com/en-us/case-studies/perplexity/

41. AI-Powered Language Translation | Case Study – NVIDIA, accessed February 9, 2025, https://www.nvidia.com/en-us/case-studies/lilt/

42. MegaScale: Scaling Large Language Model Training to More Than 10000 GPUs – arXiv, accessed February 9, 2025, https://arxiv.org/abs/2402.15627

43. Efficient Large-Scale Language Model Training on GPU Clusters Using Megatron-LM | Jared Casper – YouTube, accessed February 9, 2025, https://www.youtube.com/watch?v=gHaNUcS1_O4

44. Awesome-LLM: a curated list of Large Language Model – GitHub, accessed February 9, 2025, https://github.com/Hannibal046/Awesome-LLM

45. What are the Hardware Requirements for Large Language Model (LLM) Training?, accessed February 9, 2025, https://www.appypie.com/blog/hardware-requirements-for-llm-training