How Does Transformer Architecture in AI Work?

The Transformer architecture represents a significant leap in artificial intelligence (AI) and deep learning, particularly in natural language processing (NLP). Introduced in 2017 by researchers at Google in the groundbreaking paper “Attention Is All You Need,” this architecture departed from traditional recurrent neural networks (RNNs) and convolutional neural networks (CNNs) by relying on a mechanism called self-attention. Self-attention lets the model weigh the importance of every element of the input relative to every other element simultaneously rather than sequentially[1][2]. This innovation not only improved computational efficiency but also strengthened the model’s ability to capture long-range dependencies within data, making Transformers the backbone of numerous state-of-the-art AI applications[3].

Transformers have revolutionized the field of AI with their scalability and versatility, leading to the development of large-scale pretrained models, often referred to as foundation models. These include notable models like BERT (Bidirectional Encoder Representations from Transformers) and GPT (Generative Pre-trained Transformer), which have set new performance benchmarks in various NLP tasks such as language translation, text summarization, and question answering[4][5]. The ability to pretrain these models on vast datasets and fine-tune them for specific tasks has made them indispensable in both research and industry applications, ranging from automated customer support to advanced research in computational linguistics[6].

Despite their success, Transformer models are not without challenges. Training these models requires significant computational resources, often necessitating specialized hardware like GPUs and TPUs to handle the extensive data and complex operations efficiently[7]. Moreover, the architecture’s complexity can lead to issues like training instability and overfitting, prompting continuous research into optimization techniques and more efficient training methods. Innovations such as Layerwise Learning Rate Decay (LLRD) and the development of more efficient variants like the Switch Transformer aim to address these challenges, pushing the boundaries of what these models can achieve[8][9].

The impact of Transformer architecture extends beyond NLP into other domains such as computer vision, audio processing, and robotics, showcasing its robustness and adaptability[10]. Its ability to process and generate high-quality data has led to advancements in various fields, demonstrating the model’s broad applicability and potential for future innovations. As the AI community continues to explore and refine Transformer models, their role in driving forward the capabilities of artificial intelligence remains pivotal, promising exciting developments and applications in the years to come[11].

Historical Context

The journey of transformer architecture in artificial intelligence (AI) can be traced back to several pivotal developments in neural network models. One of the earliest notable architectures was the Neocognitron, which introduced the concepts of feature extraction, pooling layers, and convolution in neural networks, primarily inspired by the visual nervous system of vertebrates[1]. This set the groundwork for subsequent advancements in neural network design, including convolutional neural networks (CNNs).

Transformers themselves were first introduced by Google researchers in June 2017 in the seminal paper “Attention Is All You Need”[2][3]. The original research focused on machine translation, using an encoder-decoder architecture that, unlike previous models built on recurrent neural networks (RNNs), contained no recurrence at all[4][2]. As a result, transformers can process all positions of a sequence in parallel instead of one step at a time, as RNNs must, which makes them more computationally efficient and scalable[5].

The adoption and evolution of transformers accelerated quickly, and their all-at-once style of processing has inspired vivid comparisons. For instance, co-author Illia Polosukhin compared the transformer architecture to the fictional alien language in the 2016 science fiction film “Arrival,” where complex symbols conveying detailed meanings are produced all at once rather than sequentially[6]. The analogy captures how transformers handle an entire sequence in a single pass.

Transformers have driven significant breakthroughs in machine learning and AI, transitioning from neural machine translation models to foundational models that underpin various advanced applications. Their ability to be pretrained on large datasets has set them apart from traditional architectures like RNNs and CNNs, leading to more powerful and generalizable AI models[7]. These advancements have been instrumental in the development of cutting-edge models like OpenAI’s ChatGPT and DeepMind’s AlphaStar, marking a new wave of excitement and progress in the AI community[2].

The rapid ascent of transformer models coincided with the rise of large-scale pretrained models, often referred to as foundation models, further widening the gap between transformers and traditional architectures[7]. As transformers continue to evolve, they are gradually usurping previously dominant types of deep learning neural network architectures across a variety of applications, solidifying their place as the state-of-the-art in natural language processing and beyond[5][8].

Core Concepts and Components

Transformer Architecture

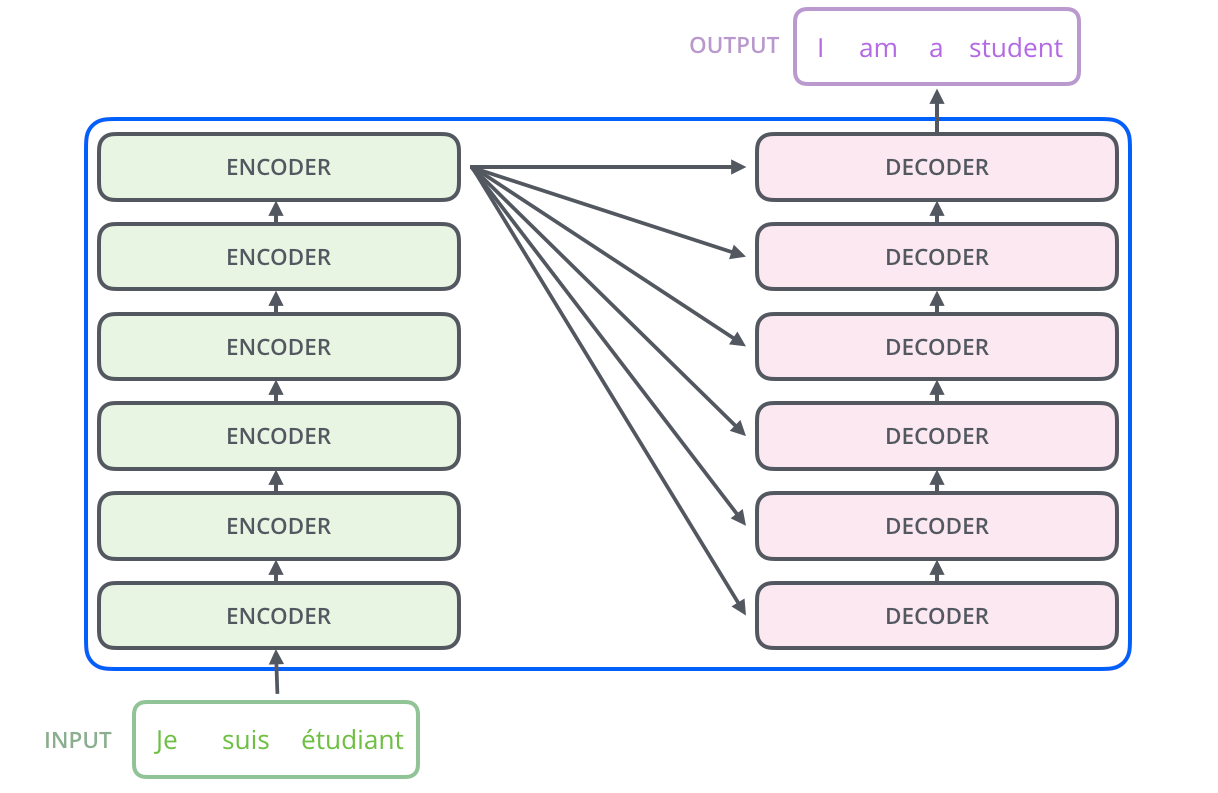

The Transformer architecture is a deep learning model that has revolutionized natural language processing (NLP) and other AI tasks. The original design is an encoder-decoder structure for sequence-to-sequence tasks such as language translation. The encoder turns the input sentence into a sequence of contextualized vectors, one per input token (not a single fixed-size vector, as in earlier RNN encoder-decoders), and the decoder attends to these vectors while producing the output sentence [8]. This architecture handles a variety of tasks efficiently, such as text classification and language translation, as demonstrated by models like Google’s T5 and Facebook’s M2M-100 [8].

Self-Attention Mechanism

A fundamental component of the Transformer architecture is the self-attention mechanism. Earlier attention mechanisms were added on top of recurrent or convolutional networks; self-attention instead lets the model itself weigh the importance of each word in a sentence based on its relationships with every other word [8][9]. This mechanism is crucial for capturing the dependencies and context within the input sequence, allowing the model to make more accurate predictions [10]. Because each position can attend to every other position of the same input sequence, the representation of each token is informed by the whole input [10].
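Below is a minimal single-head sketch of scaled dot-product self-attention, softmax(Q K^T / sqrt(d_k)) V, written in plain Python so every step is visible. The function names, the tiny three-token input, and the identity projection matrices are illustrative assumptions; real implementations use tensor libraries, multiple heads, and learned projection weights.

```python
import math
from typing import List, Tuple

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Multiply an (n x k) matrix by a (k x m) matrix."""
    return [[sum(a[i][t] * b[t][j] for t in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def transpose(m: Matrix) -> Matrix:
    return [list(row) for row in zip(*m)]


def softmax(row: List[float]) -> List[float]:
    """Numerically stable softmax over one row of scores."""
    peak = max(row)
    exps = [math.exp(x - peak) for x in row]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(x: Matrix, w_q: Matrix, w_k: Matrix, w_v: Matrix) -> Tuple[Matrix, Matrix]:
    """Single-head scaled dot-product self-attention: softmax(Q K^T / sqrt(d_k)) V."""
    q, k, v = matmul(x, w_q), matmul(x, w_k), matmul(x, w_v)
    d_k = len(k[0])
    scores = matmul(q, transpose(k))  # (n x n): every token scored against every token
    weights = [softmax([s / math.sqrt(d_k) for s in row]) for row in scores]
    return matmul(weights, v), weights  # each output row is a weighted mix of value rows


# Three 2-dimensional token vectors; identity projections keep the example readable.
x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
eye = [[1.0, 0.0], [0.0, 1.0]]
output, weights = self_attention(x, eye, eye, eye)
for row in weights:
    print([round(w, 3) for w in row])
```

Each row of `weights` sums to 1, so every output vector is a weighted average of all value vectors. The third token overlaps with both others, yet its highest score is against itself.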

Positional Encoding

One limitation of the Transformer architecture is its inability to inherently capture the relative positions of words in a sequence, as it does not utilize recurrence. To address this, positional encodings are introduced to the input embeddings, injecting information about the order of words in the sequence [11]. These positional encoding vectors are generated using sine and cosine functions of different frequencies, ensuring that the model can distinguish between different positions in the input [11].
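For reference, the sinusoidal encodings defined in the original “Attention Is All You Need” paper are given below. Here pos is the token’s position, i indexes pairs of embedding dimensions, and d_model is the embedding size:

```latex
PE_{(pos,\,2i)} = \sin\!\left(\frac{pos}{10000^{2i/d_{\text{model}}}}\right),
\qquad
PE_{(pos,\,2i+1)} = \cos\!\left(\frac{pos}{10000^{2i/d_{\text{model}}}}\right)
```

At position 0, every sine term is 0 and every cosine term is 1. As pos increases, low-index dimensions oscillate quickly and high-index dimensions oscillate slowly, so each position receives a distinct pattern.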

Residual Connections

To enhance the flow of information through the layers, the Transformer architecture incorporates residual connections. These connections allow information from the input, which contains positional embeddings, to propagate efficiently to deeper layers where more complex interactions occur [12]. Residual connections help stabilize training and improve performance by giving gradients a direct path through the network, which mitigates the vanishing-gradient problem in deep stacks of layers [12].
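The benefit for gradient flow follows from the chain rule. Write a residual block as y = x + F(x), where F stands for the sublayer and 𝓛 for the training loss (notation introduced here for illustration only):

```latex
y = x + F(x)
\quad\Longrightarrow\quad
\frac{\partial \mathcal{L}}{\partial x}
  = \frac{\partial \mathcal{L}}{\partial y}\left(I + \frac{\partial F}{\partial x}\right)
```

The identity term I carries the upstream gradient back to x unchanged. Even if the sublayer’s Jacobian ∂F/∂x is small, the gradient therefore does not shrink toward zero as it passes back through many stacked blocks.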

Layerwise Learning Rate Decay (LLRD)

Another technique used to stabilize the training of Transformer models is Layerwise Learning Rate Decay (LLRD). Instead of using a uniform learning rate across all layers, LLRD gives each layer its own learning rate. Typically the top layer receives the largest rate, and each layer below it receives the rate of the layer above multiplied by a decay factor smaller than 1. This approach helps fine-tune the model more effectively, especially when adapting pre-trained models to new tasks, because the lower layers, which tend to hold more general features, are changed less [13]. By carefully tuning the learning rates, LLRD can improve the training dynamics and performance of the model [13][14].
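The sketch below computes one learning rate per layer under the common "multiply by a decay factor per layer going down" scheme. The function name and the example values (`base_lr=2e-5`, four layers, `decay=0.9`) are hypothetical choices for illustration. In a framework such as PyTorch, these rates would then be supplied as separate optimizer parameter groups.

```python
from typing import List


def llrd_learning_rates(base_lr: float, num_layers: int, decay: float) -> List[float]:
    """Return one learning rate per layer, with index 0 as the bottom layer.

    The top layer (index num_layers - 1) gets base_lr, and each layer below it
    gets the rate of the layer above multiplied by `decay` (0 < decay <= 1).
    """
    if not 0.0 < decay <= 1.0:
        raise ValueError("decay must be in (0, 1]")
    return [base_lr * decay ** (num_layers - 1 - layer) for layer in range(num_layers)]


# Hypothetical settings: a 4-layer encoder fine-tuned with a top-layer rate of 2e-5.
rates = llrd_learning_rates(base_lr=2e-5, num_layers=4, decay=0.9)
for layer, lr in enumerate(rates):
    print(f"layer {layer}: lr = {lr:.3e}")
```

Setting `decay=1.0` recovers an ordinary uniform learning rate, which makes the decay factor easy to tune as a single extra hyperparameter.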

Attention Entropy

Training stability is a critical aspect of Transformer models. One way to monitor it is to track the attention entropy of each attention head during training. Attention entropy serves as a proxy for model sharpness and provides insight into how the attention layers evolve. A collapse to very low entropy, where heads concentrate almost all of their attention on a few tokens, is associated with unstable training and can help diagnose problems early [15].
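As a rough illustration, the hypothetical helper below computes the mean Shannon entropy of one head’s attention rows. It assumes the attention probabilities are already available as plain lists. In practice the value would be logged per head and per layer at regular training steps, and a sustained drop toward zero would be the warning sign.

```python
import math
from typing import List


def attention_entropy(weights: List[List[float]]) -> float:
    """Mean Shannon entropy (in nats) of the attention rows of one head.

    Each row is one query's attention distribution over the keys and should
    sum to 1. Zero probabilities contribute nothing (0 * log 0 is taken as 0).
    """
    entropies = [-sum(p * math.log(p) for p in row if p > 0.0) for row in weights]
    return sum(entropies) / len(entropies)


uniform = [[0.25] * 4 for _ in range(4)]                # evenly spread attention
peaked = [[0.97, 0.01, 0.01, 0.01] for _ in range(4)]  # nearly one-hot attention
print(attention_entropy(uniform))  # equals log(4), the maximum for 4 keys
print(attention_entropy(peaked))   # much lower: attention has concentrated
```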

Applications and Real-World Use

The Transformer architecture’s versatility and effectiveness have led to its adoption in various real-world applications. Notably, it is used in machine translation systems like Google Translate and Facebook’s M2M-100, which can handle translations between multiple languages [8]. Additionally, Transformers are employed in tasks such as text classification, sentiment analysis, and spam detection, showcasing their broad applicability in NLP [8].

Detailed Operation

The Transformer architecture consists of two primary components: the encoder and the decoder. Each plays a crucial role in the model’s ability to process and generate sequences.

Encoder

The encoder transforms the input tokens into contextualized representations. Rather than representing each token in isolation, the Transformer encoder captures the context of each token with respect to the entire sequence[2]. In the bottom-most encoder, an embedding layer first converts the input tokens, such as words or subwords, into vectors. These dense vectors capture semantic and syntactic properties of the tokens, and their values are learned during training[16].
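An embedding layer is essentially a lookup into a table with one row per vocabulary entry. The sketch below assumes naive whitespace tokenization and a randomly initialized table; real models use subword tokenizers and learn the table during training. All function names are hypothetical.

```python
import random
from typing import Dict, List


def build_vocab(tokens: List[str]) -> Dict[str, int]:
    """Assign each distinct token an integer id in order of first appearance."""
    vocab: Dict[str, int] = {}
    for tok in tokens:
        vocab.setdefault(tok, len(vocab))
    return vocab


def init_embeddings(vocab_size: int, d_model: int, seed: int = 0) -> List[List[float]]:
    """Random embedding table; in a real model these values are learned."""
    rng = random.Random(seed)
    return [[rng.gauss(0.0, 0.02) for _ in range(d_model)] for _ in range(vocab_size)]


def embed(tokens: List[str], vocab: Dict[str, int], table: List[List[float]]) -> List[List[float]]:
    """Look up one table row per token id."""
    return [table[vocab[tok]] for tok in tokens]


sentence = "the cat sat on the mat".split()
vocab = build_vocab(sentence)
table = init_embeddings(len(vocab), d_model=8)
vectors = embed(sentence, vocab, table)
print(len(vocab), len(vectors), len(vectors[0]))  # 5 6 8
```

Both occurrences of "the" receive the identical vector at this stage. The encoder’s attention layers, together with positional encodings, are what later make the representations position- and context-dependent.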

One crucial detail is that each sub-layer within each encoder, whether it is a self-attention mechanism or a feed-forward neural network (FFNN), has a residual connection around it, followed by a layer-normalization step. Together, the residual path and the normalization help stabilize training and mitigate vanishing gradients, a common issue in deep neural network training[17][16].
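The "Add & Norm" step wraps every sub-layer as LayerNorm(x + Sublayer(x)), the post-normalization arrangement used in the original Transformer. Many later models normalize before the sub-layer instead. The sketch below applies this to a single token vector. It omits LayerNorm’s learned gain and bias, and `toy_sublayer` is a hypothetical stand-in for attention or the feed-forward network.

```python
import math
from typing import Callable, List

Vector = List[float]


def layer_norm(x: Vector, eps: float = 1e-5) -> Vector:
    """Normalize one vector to zero mean and unit variance (learned gain/bias omitted)."""
    mean = sum(x) / len(x)
    var = sum((v - mean) ** 2 for v in x) / len(x)
    return [(v - mean) / math.sqrt(var + eps) for v in x]


def add_and_norm(x: Vector, sublayer: Callable[[Vector], Vector]) -> Vector:
    """Residual connection followed by layer normalization: LayerNorm(x + Sublayer(x))."""
    residual = [a + b for a, b in zip(x, sublayer(x))]
    return layer_norm(residual)


def toy_sublayer(x: Vector) -> Vector:
    """Hypothetical stand-in for a sub-layer: a ReLU of an affine map."""
    return [max(0.0, 2.0 * v - 1.0) for v in x]


out = add_and_norm([1.0, 2.0, 3.0, 4.0], toy_sublayer)
print([round(v, 3) for v in out])
```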

Decoder

The decoder’s primary role is to generate an output sequence by attending to both its own previous outputs and the encoder’s representations[18][11]. Each decoder consists of three major components: a self-attention mechanism, an attention mechanism over the encodings, and a feed-forward neural network[18]. The first decoder takes positional information and embeddings of the output sequence as its input, rather than encodings, and the output sequence must be partially masked to prevent reverse information flow. This masking allows for autoregressive text generation[18]. Attention mechanisms in the decoder ensure that the model doesn’t place attention on subsequent tokens, maintaining the sequence order[18]. The last decoder layer is followed by a final linear transformation and a softmax layer to produce the output probabilities over the vocabulary[18].
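The masking step can be sketched as follows. A causal mask allows query position i to attend only to key positions j ≤ i, and masked scores are treated as negative infinity before the softmax. The score values and function names here are made up for illustration.

```python
import math
from typing import List


def causal_mask(n: int) -> List[List[bool]]:
    """mask[i][j] is True when query position i may attend to key position j (j <= i)."""
    return [[j <= i for j in range(n)] for i in range(n)]


def masked_softmax(scores: List[List[float]], mask: List[List[bool]]) -> List[List[float]]:
    """Row-wise softmax that gives zero weight to disallowed (future) positions."""
    out = []
    for row, allowed in zip(scores, mask):
        peak = max(s for s, ok in zip(row, allowed) if ok)  # diagonal is always allowed
        exps = [math.exp(s - peak) if ok else 0.0 for s, ok in zip(row, allowed)]
        total = sum(exps)
        out.append([e / total for e in exps])
    return out


scores = [[0.5, 2.0, 1.0],
          [1.0, 1.0, 3.0],
          [0.0, 0.0, 0.0]]
weights = masked_softmax(scores, causal_mask(3))
for row in weights:
    print([round(w, 3) for w in row])
# [1.0, 0.0, 0.0]
# [0.5, 0.5, 0.0]
# [0.333, 0.333, 0.333]
```

Although position 0 has a high raw score against position 1, it receives no weight there, because position 1 lies in its future.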

Positional Encoding

Transformers inherently lack a mechanism to capture the sequential order of data. Therefore, positional encoding is introduced to inject order information into the model. The positional encoding vectors are of the same dimension as the input embeddings and are generated using sine and cosine functions of different frequencies. These vectors are summed with the input embeddings to provide unique signals to each position, ensuring distinct representations for identical tokens at different positions[19][20].
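The sketch below generates sinusoidal encodings, using sine on even dimensions and cosine on odd ones as in the original Transformer, and adds them to embeddings. The embedding values for the repeated token are hypothetical. The point is that the same token at two positions ends up with two different input vectors.

```python
import math
from typing import List


def positional_encoding(seq_len: int, d_model: int) -> List[List[float]]:
    """Sinusoidal encodings: sin on even dimensions, cos on odd dimensions."""
    pe = []
    for pos in range(seq_len):
        row = []
        for dim in range(d_model):
            i = dim // 2  # index of the sin/cos pair this dimension belongs to
            angle = pos / (10000 ** (2 * i / d_model))
            row.append(math.sin(angle) if dim % 2 == 0 else math.cos(angle))
        pe.append(row)
    return pe


d_model = 4
cat = [0.1, -0.2, 0.3, 0.05]  # hypothetical embedding for the token "cat"
inputs = [cat, cat]           # the same token at positions 0 and 1
pe = positional_encoding(len(inputs), d_model)
summed = [[e + p for e, p in zip(emb, enc)] for emb, enc in zip(inputs, pe)]
print(summed[0] != summed[1])  # True: identical tokens now have distinct input vectors
```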

Applications and Use Cases

The Transformer architecture is employed in various applications beyond natural language processing, including computer vision, audio, multi-modal processing, and robotics[18]. It has led to the development of pre-trained systems like generative pre-trained transformers (GPTs) and BERT (Bidirectional Encoder Representations from Transformers), which are adapted for a wide range of NLP tasks, such as language translation and text classification[7][8]. Real-world implementations include models like Google’s T5 and Facebook’s M2M-100 for multilingual translation[8]. These pre-trained models are often fine-tuned on specific tasks by adapting the output layers to suit the downstream applications[7].

Transformer Models in Action

Transformer models have revolutionized various fields within artificial intelligence and machine learning by introducing a highly effective mechanism known as attention or self-attention. This mechanism allows the model to learn the context and meaning of words in a sequence by identifying the relationships between them, even when the words are far apart in the sequence[3]. Introduced in 2017, transformer models have quickly become foundational in natural language processing (NLP) tasks[21], ranging from machine translation to question answering and more.

A key feature of transformer models is their use of attention layers, which are designed to focus on specific parts of the input data when processing each word or token in a sequence[22]. This attention mechanism significantly enhances the model’s ability to understand context and generate more accurate representations of the input data. For example, when translating text from one language to another, the attention layers help the model to effectively weigh the importance of each word, leading to higher quality translations[23].

The introduction of the transformer architecture marked a significant departure from previous neural network architectures, such as recurrent neural networks (RNNs) and convolutional neural networks (CNNs), which relied heavily on recurrence and convolutions to process sequential data[9]. The transformer model, as described in the seminal paper “Attention Is All You Need” by Vaswani et al., achieved state-of-the-art results in several translation tasks while requiring far fewer computational resources and less training time than its predecessors[24].

One of the most impactful applications of transformer models is in the development of large language models (LLMs), such as GPT (Generative Pre-trained Transformer) and BERT (Bidirectional Encoder Representations from Transformers)[18]. These pre-trained systems leverage vast datasets, like the Wikipedia corpus and Common Crawl, to generate highly coherent and contextually relevant text. Beyond NLP, transformer models are also being applied in fields like computer vision, audio processing, and robotics, showcasing their versatility and robustness[18].

The transformer architecture’s success can be largely attributed to its self-attention mechanism, which allows the model to weigh the significance of different words in a sentence regardless of how far apart they are in the sequence[19]. This capability is further enhanced by components such as embeddings and positional encodings, which convert the input sequence into a numerical form that the model can process and learn from[20]. The embeddings represent discrete tokens as continuous vectors, enabling the model to capture and calculate the relationships between words in a more nuanced manner.

Training and Optimization Challenges

Training transformer models involves several techniques and considerations that are crucial for their performance. One primary challenge is handling long input sequences. Although a transformer processes all positions in parallel during training, self-attention compares every token with every other token, so its compute and memory costs grow quadratically with sequence length, and text generation still proceeds one token at a time. Models are also trained with a fixed maximum context length, which limits how much context they can retain over extended sequences[21].
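A quick back-of-the-envelope calculation shows why long inputs are expensive. Self-attention produces one score for every pair of positions, so each head in each layer holds a seq_len × seq_len score matrix. The sketch assumes 8 heads, 32-bit floats, and a batch size of 1, and it counts only the score matrices of a single layer. The helper name is hypothetical.

```python
def attention_score_memory_bytes(seq_len: int, num_heads: int, bytes_per_value: int = 4) -> int:
    """Bytes for one layer's attention scores: one (seq_len x seq_len) matrix per head."""
    return seq_len * seq_len * num_heads * bytes_per_value


for n in (512, 1024, 2048, 4096):
    mib = attention_score_memory_bytes(n, num_heads=8) / 2**20
    print(f"{n:5d} tokens -> {mib:8.1f} MiB per layer (batch size 1)")
```

Under these assumptions, 512 tokens need 8 MiB per layer and 4096 tokens need 512 MiB. Each doubling of the sequence length quadruples the cost.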

Optimizing transformer models typically involves the use of algorithms such as Adam or stochastic gradient descent (SGD). These optimizers play a pivotal role in the training of deep learning models, helping to minimize the loss function and improve the model’s accuracy over time[21][25]. However, training stability remains a significant concern, as transformers can exhibit instability during training, which can be mitigated through various strategic pretraining methods and optimization techniques[14][25].

Another challenge is the computational resources required for training large transformer models. They often necessitate significant computational power, memory, and storage capacity, making it difficult for IT departments to support AI model training without adequate hardware and software infrastructure[26][27]. Additionally, the use of graph execution is recommended for large model training to handle complex operations efficiently, whereas eager execution may be more suitable for smaller models[28].

Selecting appropriate training data is also critical. One recommended approach is to find external datasets that closely match the target task and data, use them to further train a base transformer model such as BERT, and save the resulting weights for later use[14]. Practical parameters such as batch size, learning rate, number of warmup steps, maximum sentence length, and checkpoint averaging need careful tuning to improve training efficiency and performance[29].
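Warmup steps interact directly with the learning rate. As one concrete example, the original Transformer paper used lrate = d_model^-0.5 · min(step^-0.5, step · warmup_steps^-1.5), with d_model = 512 and warmup_steps = 4000. The function below is a plain-Python sketch of that formula. The function name is hypothetical, and the defaults simply mirror the paper’s base configuration.

```python
def transformer_lr(step: int, d_model: int = 512, warmup_steps: int = 4000) -> float:
    """Warmup-then-decay schedule from the original Transformer paper.

    The rate grows linearly for the first `warmup_steps` steps, then decays
    in proportion to 1 / sqrt(step).
    """
    step = max(step, 1)  # avoid 0 ** -0.5 at the very first step
    return d_model ** -0.5 * min(step ** -0.5, step * warmup_steps ** -1.5)


for step in (1, 1000, 4000, 16000, 64000):
    print(step, f"{transformer_lr(step):.2e}")
```

The peak rate occurs exactly at `step == warmup_steps`, where the two terms inside `min` are equal. Increasing the number of warmup steps therefore both delays and lowers the peak.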

Despite these challenges, transformer models bring significant advantages to various Natural Language Processing (NLP) tasks, such as question answering, sequence classification, and named entity recognition[14]. Nevertheless, their training and inference times can be prohibitively slow for some applications, necessitating continuous research and development to enhance their efficiency and scalability[27].

Advancements and Innovations

The transformer architecture has significantly transformed the landscape of artificial intelligence, driving numerous advancements and innovations across various domains. Initially introduced in the seminal 2017 paper “Attention is All You Need” by researchers at Google, the transformer architecture has become the backbone of many groundbreaking models in natural language processing (NLP) and beyond[2][3].

One of the most impactful advancements facilitated by transformers is the development of large-scale pretrained models, often referred to as foundation models. These models leverage the transformer architecture to pretrain on vast datasets, enabling them to adapt to a variety of downstream tasks with minimal fine-tuning. Examples include BERT, GPT-2, GPT-3, and ELECTRA, which have set new performance standards in NLP tasks such as text generation, summarization, and question answering[7][18].

Transformers have also revolutionized the training and application of deep neural networks by employing self-attention mechanisms. This allows the model to weigh the importance of different elements in the input data, leading to better contextual understanding and performance stability[30][19]. These advancements have not only improved NLP but have also extended to other fields such as computer vision, audio processing, and even robotics, demonstrating the versatility and robustness of the transformer architecture[31][18].

Another notable innovation is the introduction of specialized techniques and optimizations that enhance the efficiency and effectiveness of transformer models. For example, T-Fixup, an extension of Fixup Initialization, optimizes the initialization strategy for transformers, enabling their training without the need for layer normalization. This results in a simpler and more efficient training process[25].

Furthermore, transformers have played a pivotal role in the development of state-of-the-art AI systems like OpenAI’s GPT series and DeepMind’s AlphaStar, which leverage the architecture for tasks ranging from natural language understanding to strategic game playing[2][21]. These applications underscore the transformative potential of the transformer architecture in advancing the field of AI and setting new benchmarks for machine learning models.

Looking Forward

The future of Transformer architecture in AI is marked by continuous advancements and refinements aimed at leveraging its full potential. One significant area of focus is advanced NLP model training, which involves leveraging transfer learning, mitigating instability, and employing strategic pretraining methods[14]. This focus is crucial for improving the efficiency and performance of Transformers, especially when dealing with long pieces of text where context from far back can influence the meaning of subsequent text[20].

The evolution of Transformers is also closely linked with the advent of large-scale pretrained models, commonly referred to as foundation models[7]. These models benefit from scaling to multiple GPUs, optimizing training parameters like batch size, learning rate, and maximum sentence length, as well as employing techniques like checkpoint averaging to enhance training outcomes[29]. Such practical tips help tailor the training process to specific hardware and data constraints, thereby improving the results.

In addition to improvements in NLP, Transformers have expanded into other domains like image processing. Despite their strong performance and ease of training, their computational expense on high-resolution images remains a challenge[32]. Meanwhile, innovations like AI sparsity and mixture-of-experts (MoE) architectures are driving performance gains, particularly in language processing, with models like the Switch Transformer reaching more than a trillion parameters[3].
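The core idea behind Switch-style sparsity is top-1 routing. A small router scores each expert for a given token, only the best-scoring expert runs, and its output is scaled by the router probability. As a result, adding experts adds parameters without adding much per-token compute. This is a minimal sketch with hypothetical toy experts and router weights. It omits details such as the load-balancing loss and expert capacity limits that a real Switch layer uses.

```python
import math
from typing import Callable, List

Vector = List[float]


def softmax(xs: Vector) -> Vector:
    peak = max(xs)
    exps = [math.exp(x - peak) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def switch_layer(token: Vector, router_w: List[Vector],
                 experts: List[Callable[[Vector], Vector]]) -> Vector:
    """Top-1 mixture-of-experts routing for a single token vector."""
    logits = [sum(w * x for w, x in zip(row, token)) for row in router_w]
    probs = softmax(logits)
    best = max(range(len(experts)), key=lambda e: probs[e])  # only this expert runs
    return [probs[best] * y for y in experts[best](token)]


router = [[1.0, 0.0], [0.0, 1.0]]            # hypothetical router weights, one row per expert
experts = [lambda v: [2.0 * a for a in v],   # toy expert 0
           lambda v: [-a for a in v]]        # toy expert 1
print(switch_layer([3.0, 0.0], router, experts))  # routed to expert 0
print(switch_layer([0.0, 3.0], router, experts))  # routed to expert 1
```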

Furthermore, the efficiency and scalability of Transformers, which let them train on much larger datasets and use many more parameters than previous architectures, underline their power and generalizability[6]. Processing all positions of a sequence in parallel, rather than one step at a time, has also been a game-changer, allowing effective use of GPUs and speeding up training[31].

Looking ahead, the combination of sophisticated model architectures and advanced training techniques promises to push the boundaries of what Transformer-based models can achieve. Whether it’s through improved computational efficiency, larger-scale models, or novel applications across various AI domains, the future of Transformers in AI is poised for continued innovation and impact.

References

[1]: Convolutional Neural Networks: A Brief History of their Evolution | by Brajesh Kumar | AppyHigh Blog | Medium

[2]: How Transformers Work: A Detailed Exploration of Transformer Architecture | DataCamp

[3]: What Is a Transformer Model? | NVIDIA Blogs

[4]: KiKaBeN – Transformer’s Encoder-Decoder

[5]: What is a Transformer Model? | Definition from TechTarget

[6]: Transformers Revolutionized AI. What Will Replace Them?

[7]: 11. Attention Mechanisms and Transformers — Dive into Deep Learning 1.0.3 documentation

[8]: A Comprehensive Overview of Transformer-Based Models: Encoders, Decoders, and More | by Minhajul Hoque | Medium

[9]: The Transformer Attention Mechanism – MachineLearningMastery.com

[10]: What’s the Difference Between Self-Attention and Attention in Transformer Architecture?

[11]: The Transformer Model – MachineLearningMastery.com

[12]: Transformer Architecture: The Positional Encoding – Amirhossein Kazemnejad’s Blog

[13]: Your Transformer needs a Stabilizer | by Vignesh Baskaran | Medium

[14]: Tips and Tricks to Train State-Of-The-Art NLP Models

[15]: Stabilizing Transformer Training by Preventing Attention Entropy Collapse – Apple Machine Learning Research

[16]: Transformer Model: 6 Key Components & Training Your Transformer

[17]: The Illustrated Transformer

[18]: Transformer (deep learning architecture) – Wikipedia

[19]: Positional Encoding in Transformers – GeeksforGeeks

[20]: What are Transformers? – Transformers in Artificial Intelligence Explained – AWS

[21]: What is a Transformer Model? | IBM

[22]: How do Transformers work? – Hugging Face NLP Course

[23]: Neural Machine Translation with Transformers | by Gal Hever | Medium

[24]: Transformer: A Novel Neural Network Architecture for Language Understanding

[25]: Efficiently Training Transformers: A Comprehensive Guide to High-Performance NLP Models

[26]: 6 Common AI Model Training Challenges | Oracle Nigeria

[27]: Demystifying Transformers Architecture in Machine Learning

[28]: Training the Transformer Model – MachineLearningMastery.com

[29]: [1804.00247] Training Tips for the Transformer Model

[30]: Attention Mechanism in the Transformers Model | Baeldung on Computer Science

[31]: Transformer Neural Networks: A Step-by-Step Breakdown | Built In

[32]: Evolution of Convolutional Neural Network Architectures | by Aaryan Gupta | The PEN Point | Medium